Most digital marketers understand the fundamentals of SEO (search engine optimization), but few go deep into the technical mechanisms that power search engines. Two important but often misunderstood components are search engine crawlers and crawl budgets.

In this piece, we’ll dive into how crawling works, what a crawl budget is, and why it’s important for SEO. We’ll also share some best practices for optimizing your crawl budget to increase your website’s chances of ranking higher and faster in search engines.

Understanding Crawling, Crawlers, and Crawl Budgets

Before any webpage appears in search results, it must first be discovered and understood by search engines like Google.

This process typically begins with crawling: search engines use crawlers (also called bots or spiders) to follow links from one page to another. The crawlers collect data about each page as they go. But running enough bots to index a significant portion of the internet is expensive, so search engines typically employ a crawl budget to help limit costs.

Before we discuss crawl budgets in more detail, let’s look more closely at how search engine crawlers operate:

As mentioned above, crawlers systematically browse the web to find new and updated content, ensuring that data about the most current version of each site they crawl is stored for use by the search engine. There are more than four billion indexed pages of content on the internet, and the average size of each page is roughly two megabytes — meaning that you’d need more than seven petabytes just to store the raw data from the small portion of the web that is currently indexed.
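The storage estimate above is simple arithmetic, and it can be reproduced in a few lines. This sketch uses only the two figures quoted in the paragraph; treating a "megabyte" as 1024² bytes and reporting the result in binary petabytes is our assumption, and the function name is made up for illustration.

```python
# Back-of-the-envelope storage estimate from the figures quoted above.
INDEXED_PAGES = 4_000_000_000    # "more than four billion indexed pages"
AVG_PAGE_BYTES = 2 * 1024**2     # "roughly two megabytes" (binary MB assumed)


def raw_storage_pebibytes(pages: int, bytes_per_page: int) -> float:
    """Return the raw storage needed, in pebibytes (1 PiB = 1024**5 bytes)."""
    return pages * bytes_per_page / 1024**5


estimate = raw_storage_pebibytes(INDEXED_PAGES, AVG_PAGE_BYTES)
print(f"{estimate:.2f} PiB of raw page data")
```

With these inputs the estimate lands a little above seven petabytes. Using decimal units instead (1 MB = 10⁶ bytes, 1 PB = 10¹⁵ bytes) gives about eight, so the exact figure depends on the convention you pick.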

That might seem like a manageable number, but it doesn’t include metadata for each page, the relationships between pages, or the fact that the average page size and the number of pages are growing every year. Based on data that Google released in 2008, people estimate that the company now processes hundreds to thousands of petabytes every day. So it clearly takes more than a few petabytes to run a real search engine.

At that scale, managing data is incredibly difficult, so search engines want to collect only data that they actually need, only as often as they actually need it. They would waste a lot of storage and computing resources if they tried to crawl every single page on the internet every single hour, so they’ve built mechanisms that limit their efforts while maintaining the most up-to-date index they can. This is where crawl budgets come in.

Search engines set a crawl budget for each site they index to ensure that they don’t waste resources collecting data from sites that update infrequently or have consistently low-quality content. Websites with higher crawl budgets are indexed more comprehensively and frequently, which increases their visibility in search results. Conversely, sites with lower crawl budgets will not be crawled as frequently, or they may not be crawled completely each cycle, leaving some content invisible to search engine users for days, weeks, or months.

The relationship between crawl budget and website visibility hinges on how effectively a site can guide search engine crawlers to its most important pages. If crawlers spend too much time on irrelevant portions of the site, they may miss content changes in key areas, leaving valuable pages out of their index. Similarly, if sites rarely add new content or their content isn’t linked to often, search engines will crawl the site less frequently to look for updates.

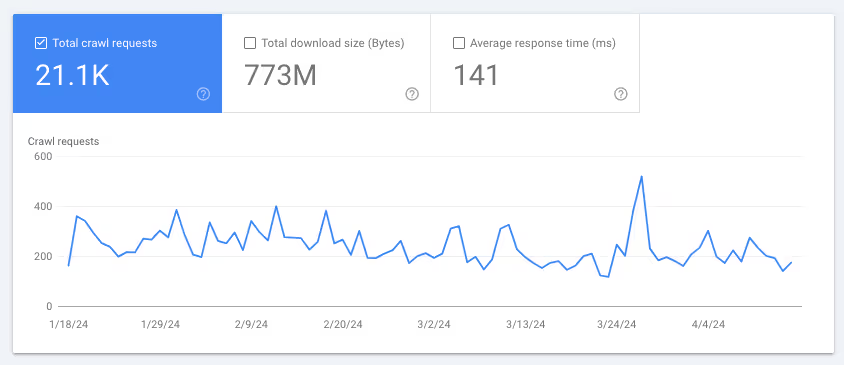

To understand your crawl budget and how often Google currently crawls your site, you can use Google Search Console’s (GSC’s) Crawl Stats report.

This report allows you to check how many pages are typically crawled per day, identify crawl errors, and understand how Google views your site structure. While Google isn’t the only search engine, it has enough market share that its reports are statistically and directionally significant. This data can help you make informed decisions about site architecture, content updates, and server performance improvements to maximize your SEO efforts.
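If you also have access to your server’s access logs, you can cross-check the report’s “pages crawled per day” figure yourself. The sketch below assumes logs in the common combined format (date in square brackets, user agent at the end of the line) and simply counts lines that mention Googlebot. The function name and sample lines are hypothetical, and because user agents can be spoofed, treat the counts as a rough signal rather than a replacement for the Crawl Stats report.

```python
import re
from collections import Counter

# Matches the date portion of a combined-log timestamp, e.g. [10/Oct/2023:13:55:36 +0000]
DATE_PATTERN = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")


def googlebot_hits_per_day(log_lines):
    """Count log lines per day whose text mentions the Googlebot user agent."""
    hits = Counter()
    for line in log_lines:
        if "Googlebot" not in line:
            continue  # skip requests from other clients
        match = DATE_PATTERN.search(line)
        if match:
            hits[match.group(1)] += 1
    return hits


# Hypothetical sample lines for illustration only.
sample_log = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /a HTTP/1.1" 200 2326 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:14:01:02 +0000] "GET /a HTTP/1.1" 200 2326 "-" "Mozilla/5.0"',
    '66.249.66.1 - - [11/Oct/2023:09:12:00 +0000] "GET /b HTTP/1.1" 200 1200 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

daily_hits = googlebot_hits_per_day(sample_log)
```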

Mastering your crawl budget is not just about allowing Google to crawl your site — it's also about making sure it crawls the right parts of your site efficiently. Understanding this will enable you to prioritize SEO efforts, leading to better visibility and, indirectly, higher search rankings. In the next section, we’ll look at the most important factors search engines use to determine your crawl budget, as well as how you can optimize your site to get the most out of your crawl budget.

Factors Influencing Crawl Budget and How to Optimize Them

At a high level, search engines will set a crawl budget for your site based on two dimensions: demand and capacity.

Demand is based on the number of high-quality pages a site has, how often they’re linked to, and how frequently they’re updated. A site’s capacity is its ability to handle requests quickly (that is, site speed) and the number of crawlers search engines have available.

These two dimensions can be further broken down into a few factors that you can optimize. Let’s look at each of them and some specific tactics you can use to improve them, so you can increase your crawl budget and use your existing crawl budget more efficiently.

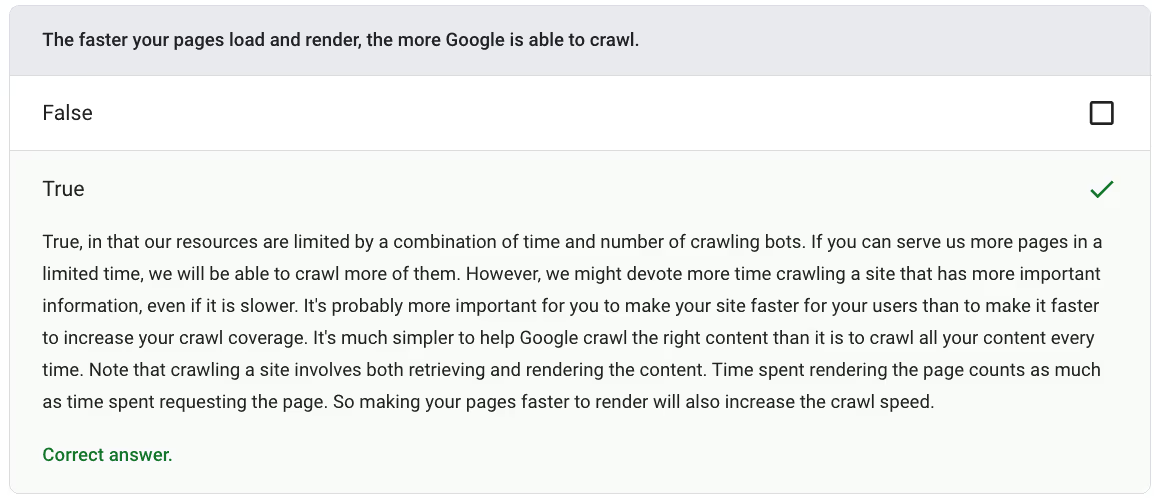

Site Speed

Search engines favor websites that load quickly. A faster site allows a crawler to scan more pages in a given amount of time, thus using the allocated crawl budget more efficiently. A faster site also improves user experience, so in most cases, this is one of the best areas to focus on first if you’re looking to improve SEO.

Optimizing your site’s page speed and addressing any issues with Core Web Vitals can also encourage search engines to crawl your site more frequently, thus increasing your crawl budget.
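A quick calculation shows why speed matters so much here. The sketch below assumes a crawler that fetches pages one at a time and spends a fixed amount of fetch time on your site per day. The 600-second figure is invented for illustration (search engines don’t publish such a number), and real crawlers fetch in parallel, so only the ratio between the two results is meaningful.

```python
def pages_crawled(fetch_seconds_available: float, avg_response_seconds: float) -> int:
    """Estimate how many pages fit into a fixed amount of sequential fetch time."""
    if avg_response_seconds <= 0:
        raise ValueError("avg_response_seconds must be positive")
    return int(fetch_seconds_available // avg_response_seconds)


DAILY_FETCH_SECONDS = 600  # hypothetical time a crawler spends on the site per day

slow_site = pages_crawled(DAILY_FETCH_SECONDS, 1.5)  # 1.5 s average response
fast_site = pages_crawled(DAILY_FETCH_SECONDS, 0.5)  # 0.5 s average response
print(slow_site, fast_site)
```

Under these assumptions, cutting the average response time to a third lets the same crawl time cover three times as many pages.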

Website Size and Complexity

In general, a smaller, simpler website will be easier for crawlers to index than a large one. Websites with thousands or millions of pages face unique challenges, as they need to ensure that their most important content is crawled and indexed efficiently. Similarly, complex sites with deep architectures and numerous subdomains might struggle to ensure that crawlers get to all of their content.

If you run a large, complex site, make sure your navigation and link structure are as simple as possible. Use sitemaps to point crawlers toward the pages you want indexed, so they spend less time on slow or unnecessary ones, and make sure your best pages are linked to often from other popular sites. This will help increase your overall crawl budget and ensure that the most valuable pages are crawled first when possible.
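For reference, a sitemap is just an XML file listing the URLs you want crawled, optionally with the date each one last changed. Here is a minimal sketch that builds one with Python’s standard library. The `build_sitemap` helper and the example.com URLs are placeholders, and most content management systems can generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified) pairs, dates as YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs: list only the pages you actually want crawled.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/pricing", "2024-01-10"),
])
print(sitemap_xml)
```

Keeping the list limited to your important, canonical pages is the point: the file is a hint about where crawlers should spend their time, not a full inventory of every URL on the site.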

Content Quality and Freshness

Search engines aim to give users the most relevant and up-to-date information, so websites that regularly update their content with high-quality, engaging material will attract more frequent crawling and larger crawl budgets. That’s why high-quality news sites like The New York Times or The Wall Street Journal are crawled multiple times per day.

Remove thin content and duplicate content, ensure that every page you publish is valuable, and pay attention to general SEO best practices. In addition, you should be publishing new content or significant page updates as frequently as possible to signal to search engines that your site deserves more frequent visits.

Technical Issues

Technical problems like broken links, excessive or redundant redirects, and duplicate content can negatively impact your crawl budget. When search engines encounter these issues, they waste valuable time navigating through problematic links or redundant pages, so they have less time to discover your site’s unique, valuable content.

Perform regular audits and keep an eye on your GSC Crawl Stats report in order to identify and fix these errors quickly. Implementing the right kind of redirects, using canonical URLs to handle duplicate content, and repairing broken links can help preserve your crawl budget for more important pages and ensure that search engines don’t penalize your site.
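Redirect chains are one of the easier problems to audit programmatically. The sketch below assumes you have already exported your redirect rules as a simple source-to-destination mapping (the paths shown are made up). It follows each chain to its end, flags loops, and produces a flattened map in which every old URL points straight at its final destination, so a crawler needs only one hop.

```python
def resolve_redirect(url: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[str, int]:
    """Follow a redirect map from url; return (final_url, number_of_hops)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url!r}")
        seen.add(url)
    return url, hops


def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL directly at its final destination."""
    return {source: resolve_redirect(source, redirects)[0] for source in redirects}


# Hypothetical rules: /old-page takes two hops to reach /new-page.
rules = {"/old-page": "/interim-page", "/interim-page": "/new-page"}

final_url, hop_count = resolve_redirect("/old-page", rules)
flattened = flatten_redirects(rules)
```

Any source with a hop count above one is a candidate for cleanup, and a `ValueError` points to a loop that crawlers will never get out of.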

Server Capacity and Response Time

Your servers’ ability to handle requests efficiently plays a critical role in determining how often search engines will crawl your site and how many pages they’ll reach when they do. If a server is slow or frequently down when crawlers visit, they may reduce the frequency of their visits to avoid overloading your server, leading to fewer pages being crawled and indexed.

Improving your server’s response time and capacity will almost certainly require some work on the part of your web development team, but it might be as simple as upgrading your hosting plan. It’s tempting to use the cheapest hosting possible, but keep in mind that poor performance comes with a cost to your SEO results and user experience. Ensuring that your server is reliable and has a fast response time (less than 200 ms) will encourage search engines to use their crawl budget more liberally on your site.
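To check yourself against the 200 ms target, you can summarize response times collected from your server logs or monitoring tool. The sketch below assumes you already have those measurements as a list of milliseconds. The sample values are invented, and using the median as the pass/fail measure is our choice rather than anything search engines specify.

```python
from statistics import median

TARGET_MS = 200  # response-time target mentioned above


def summarize_response_times(samples_ms: list[float], target_ms: float = TARGET_MS) -> dict:
    """Summarize response-time samples against a target threshold."""
    if not samples_ms:
        raise ValueError("at least one sample is required")
    slow_count = sum(1 for sample in samples_ms if sample >= target_ms)
    typical = median(samples_ms)
    return {
        "median_ms": typical,
        "slow_share": slow_count / len(samples_ms),  # fraction at or above the target
        "meets_target": typical < target_ms,
    }


# Invented measurements for illustration.
summary = summarize_response_times([120, 180, 150, 450, 190])
print(summary)
```

The share of slow responses is worth watching alongside the median, since occasional very slow responses may point to capacity problems under load even when the typical request is fast.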

By addressing these five factors, you can influence how search engines use their crawl budget on your site, leading to better SEO performance and improved visibility in search results.

How Much Should I Care About Crawl Budget?

If we’re being honest, most websites don’t need to over-optimize for crawl budget. In its Search Central documentation, Google notes that you don’t need to read its crawl budget guide if you’ve got a relatively small website or if your webpages are already crawled the same day that they are published.

Crawl budget tends to become a concern once a website has more than 10,000 pages that are relatively dynamic or changing often, or once it has more than one million pages (a large website, according to Google).

However, if you notice a large number of URLs flagged as “Discovered - currently not indexed” in the Page indexing report in GSC, then crawl budget might be a concern regardless of your site’s size.

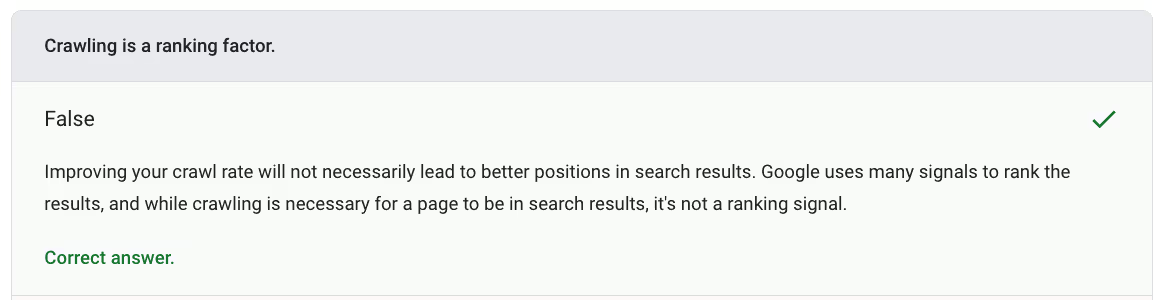
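The rules of thumb in this section can be condensed into a quick check. This is only a sketch of the thresholds described above, not a tool Google provides; the function and parameter names are made up, and what counts as “changes often” or “many” flagged URLs is left to your judgment.

```python
def crawl_budget_likely_matters(
    page_count: int,
    changes_often: bool,
    many_discovered_not_indexed: bool = False,
) -> bool:
    """Apply the article's rules of thumb for when crawl budget deserves attention."""
    if many_discovered_not_indexed:
        return True  # GSC is already showing a backlog of uncrawled URLs
    if page_count > 1_000_000:
        return True  # a large website by Google's definition
    return page_count > 10_000 and changes_often


small_blog = crawl_budget_likely_matters(500, changes_often=True)
busy_store = crawl_budget_likely_matters(50_000, changes_often=True)
static_archive = crawl_budget_likely_matters(50_000, changes_often=False)
```

Here the small blog and the static archive come back `False`, while the frequently changing 50,000-page store comes back `True`.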

Crawl Budget Isn’t a Direct Ranking Factor

It is worth mentioning that crawl budget isn’t a direct ranking factor. In other words, improving the crawl budget for your website will probably not, on its own, improve the ranking of any single page. That being said, if you are frequently making positive changes to your pages and Google isn’t discovering those changes, your pages might, in theory, not rank as well as they otherwise would.

And, of course, new webpages must be crawled before they can appear or rank in search engines, so pages that aren’t getting crawled won’t show up at all.

Final Thoughts

While you don’t have to be a software engineer to work in SEO, having a basic understanding of how search engine crawlers work and the way they set and use budgets will help you prioritize your SEO efforts. Keep an eye on your GSC Crawl Stats report, and take any opportunity you can to improve site speed, lower site complexity, increase content quality, resolve technical issues, and improve your server’s responsiveness.

If you’re looking for more tools to improve your content marketing and SEO efforts, then check out Positional. Their modern SEO toolset can help you find new opportunities to optimize and increase your content output.