Having high-quality original content is mission-critical to any content marketing or search engine optimization (SEO) strategy. However, according to one study, as many as 29% of webpages have duplicate content issues.

Duplicate content is simply content that exists in multiple places on the internet, either internally on a website or externally on other websites, without a canonical tag in place. Duplicate content issues are very common and can have a negative impact on a website’s performance in search engines.

For search engines like Google, duplicate content creates a number of issues. For one, if Google is confused as to a piece of duplicate content’s original source, it may impact those webpages’ rankings in search engines. In addition, large amounts of duplicate content on a website may cause indexing issues and keyword cannibalization. And in extreme cases, Google may take action to remove websites with large amounts of duplicate or scraped content that seems to have been published to manipulate search results.

For example, if you publish a webpage on your site with content that was heavily copied from another site, Google may be unsure which version of the content to include in its search results, and it is unlikely that they’ll show both versions of a very similar page. And if you copy content from other pages on your website, it’s hard for Google to see how those URLs are uniquely valuable for searchers.

In this article, we’ll explain how duplicate content hurts SEO, highlight the four different types of duplicate content that you should be aware of, and show you how to identify and fix duplicate content problems, including through canonicalization.

How Does Duplicate Content Hurt SEO?

Duplicate content issues can have significant negative effects on search engine rankings and traffic.

Indexing Problems

When Google crawls your website, they’re working to understand and index the pages that appear to be valuable. If you have large amounts of duplicate content on your website, whether it’s internal or external, Google will probably not index these pages. And if a webpage isn’t indexed, it won’t appear in search results and won’t drive traffic back to your website.

From Google’s perspective, if a webpage is highly duplicative of another, there’s very little value in indexing and ranking that page alongside its duplicate. Moreover, doing so might be really confusing to searchers.

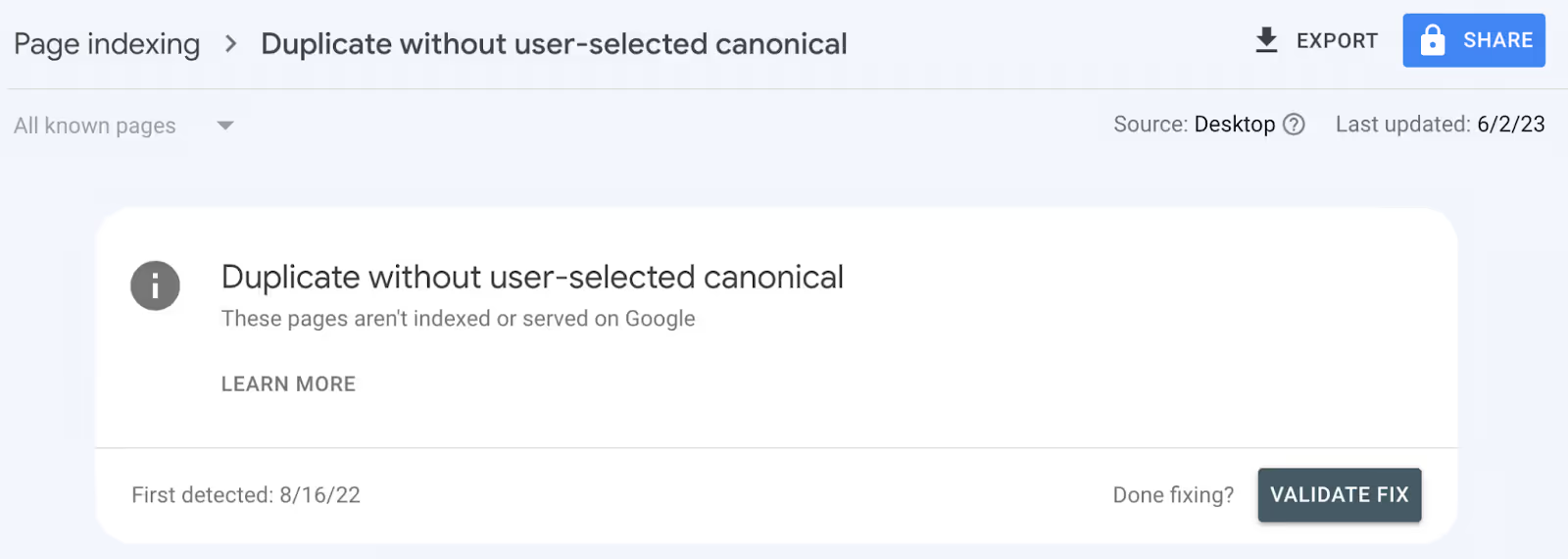

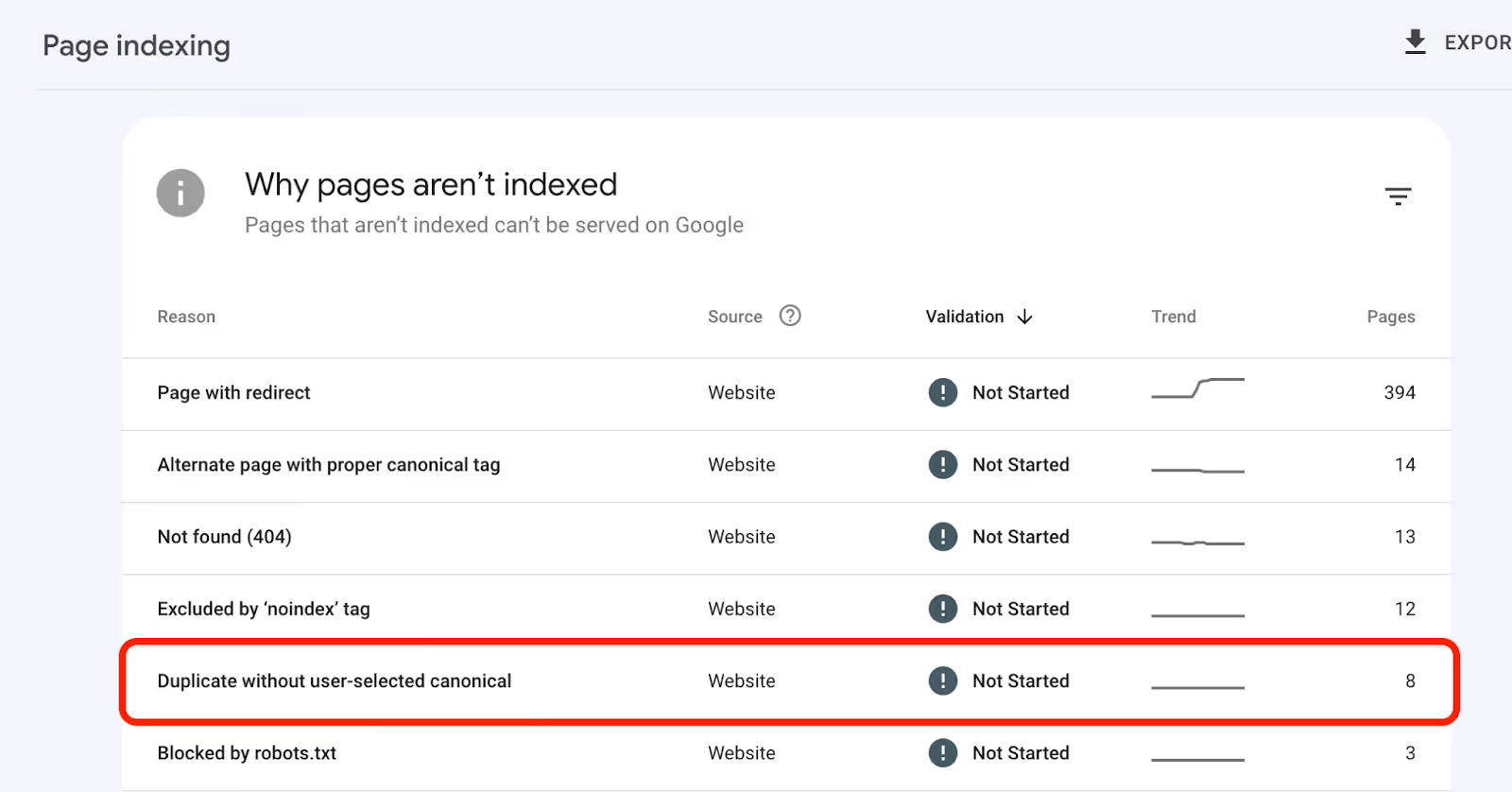

Google will alert you to internal duplicate content issues via Google Search Console:

If these issues exist, it’s likely that Google will also alert you that these pages are discovered but currently not indexed. More on this later.

However, Google will not generally alert you to external duplicate content issues in Search Console unless the issues are significant. If you’ve copied another website, it’s highly likely that your webpage won’t index in Google search. And if someone else has copied your content, it may cause indexing issues for your website, too. There are tools for detecting externally duplicative content, including tools offered by Positional — more on this later, too.

Keyword Cannibalization

Keyword cannibalization occurs when you have two very similar or identical pages on your website that are both indexed and attempting to rank for the same keyword. To Google, it will be unclear which of your pages should be ranking for the given keyword. And as a result, both pages will rank more poorly than they would otherwise.

If you’re reusing substantial amounts of content on multiple pages, then you likely have duplicate content issues, and there’s a high probability that you’ll also experience cannibalization issues.

Cannibalization often happens when there are many doorway or thin pages created programmatically — for example, pages with very similar topics like “best life insurance in Charleston, South Carolina” and “best life insurance in Mt. Pleasant, South Carolina.”

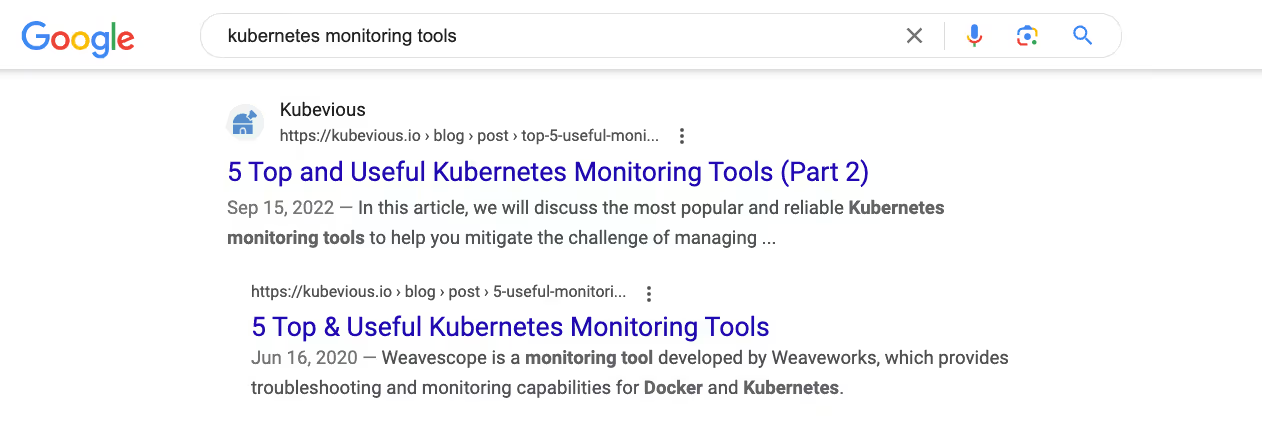

As a quick example, keyword cannibalization looks like this:

This website has two very similar articles about Kubernetes monitoring tools — parts one and two of a series, ranking on the third page of search results. To Google, it’s unclear which page should be ranking for the primary term. Depending on the website’s domain authority, you’ll typically see websites with keyword cannibalization issues appearing on pages two through four of search engine results pages (SERPs).

Internal Linking Challenges

Internal linking is an important part of any technical SEO strategy. Internal links help Google’s crawlers understand what your website is about and how your webpages are interconnected. Internal links are also very important for distributing PageRank, or link equity, across pages on your website.

If you have a large number of duplicate pages, prioritizing internal links across pages becomes difficult. In other words, if you have two virtually identical pages, choosing which page to internally link to from your website’s other pieces of content can be impossible.

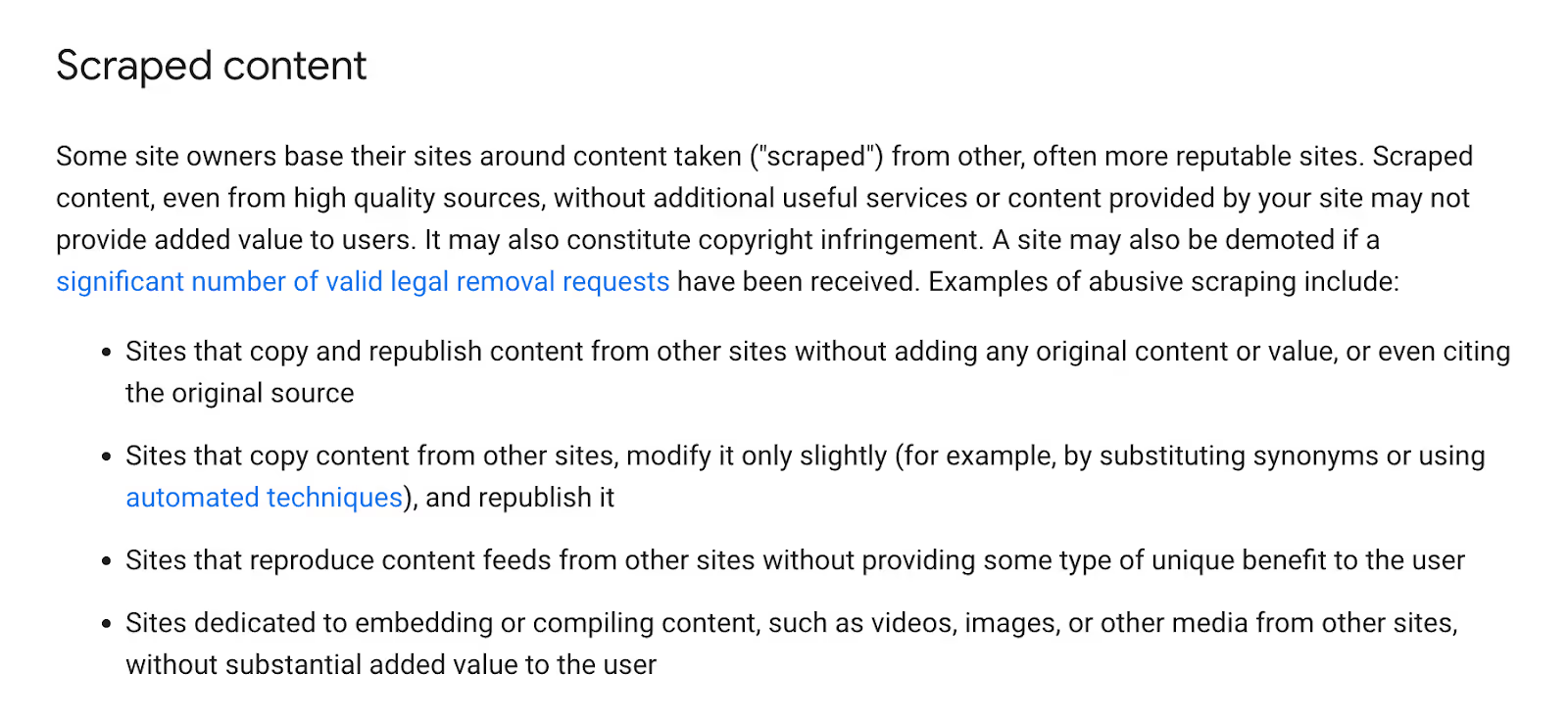

Google’s Duplicate Content Penalties

Google’s Search Essentials guidelines include an in-depth explanation of the different types of spam that they’re targeting and that you should avoid. They go into detail on scraped content, or duplicate content that was taken from other websites without permission:

If Google detects duplicate content on your website, either algorithmically or as a result of a large number of removal requests, they will take action against your website. While receiving a duplicate content penalty is rare, it is possible, and the impact on your website can be monumental. For example, Google could remove your entire website from its SERPs.

Types of Duplicate Content

There are four main types of duplicate content.

1. Copied or Scraped Content

The first and most obvious type of duplicate content is content that has been substantially copied from one page to another, either internally or externally.

For example, if you have a large number of similar product pages on your website, you may be using boilerplate copy across all of them. Alternatively, you may have done a large number of blog posts as part of a series, and you might be reusing intro copy and/or marketing content within each post. In these cases, you’d have duplicate content across these webpages, and it might be difficult for Google to see how each page is uniquely valuable and worthy of ranking in search results.

On the other hand, you may have knowingly or unknowingly copied a competitor when publishing a new piece of content for your website. If you’re hiring freelance writers, this is unfortunately a very common occurrence.

Similarly, if you’re scraping large amounts of content from other websites as a way to improve the pages on your website, you may be inadvertently copying and pasting too much content from those websites.

2. Republished Content

Republishing your content to different domains (for example, republishing a blog post on Medium) may be a great distribution strategy. However, if you’re republishing your content to third-party websites, you should make sure that the republishing website includes a canonical tag with the original URL on your website.

If you republish content to other websites without using canonical tags, you may confuse search engines as to the original source of that content. As a result, your page may not rank as well in search engines, and may even rank below the republished pages, especially if those pages exist on websites with higher domain authority.

If you’re republishing content to a website that does not offer canonical links, at a minimum try to include a backlink back to your website with a reference that the original version of the article existed on your website. For example, something like “This article was originally published on [insert name of your website]” with a hyperlink on the words “originally published.”

3. URL Parameters

URL parameters are really helpful, both for website owners and for people coming to a website. You can use URL parameters for website tracking and for displaying different but similar versions of a page to a user. However, if you aren’t using canonical tags appropriately, these slightly different versions of your pages can be construed as duplicative to search engines.

If you have a landing page on your website, you may want to customize that landing page to a specific prospective customer using a URL parameter. You could also use a URL parameter on an e-commerce website to specify a different type or version of a product.

For example, you might have a webpage for a shirt on your website located at www.example.com/shirt.

There are likely a few different color options for this shirt. For the green version of this shirt, you might use the URL: www.example.com/shirt?color=green

If you’re using URL parameters to create different URLs with substantially identical content, you’ll want to include a canonical tag specifying the primary URL for this group of pages. More on this below!

4. HTTP vs. HTTPS or www. vs. non-www.

While you should typically serve pages only on HTTPS, there might be edge cases where you need to serve content on an HTTP version, too. Similarly, if you’re using the www. prefix across the pages on your website, you should probably stick to that universally.

However, if you’re delivering live content with both HTTP and HTTPS URL variations, or with a different prefix, you can run into a duplicate content issue. In short, search engines will see these pages as different but duplicative and may be confused as a result.

In this situation, you should use a canonical tag to direct search engines to the primary version of this URL.

How to Check for Duplicate Content

Fortunately, there are a number tools that can spot duplicate content, including both exact duplicates and content that may be slightly rewritten or only a partial copy.

Google Search Console

As mentioned above, Google Search Console will alert you to some of the duplicate content issues happening on your own website. In other words, Search Console will tell you if you have multiple pages on your website that are identical or very similar, if those issues are causing those pages not to be indexed.

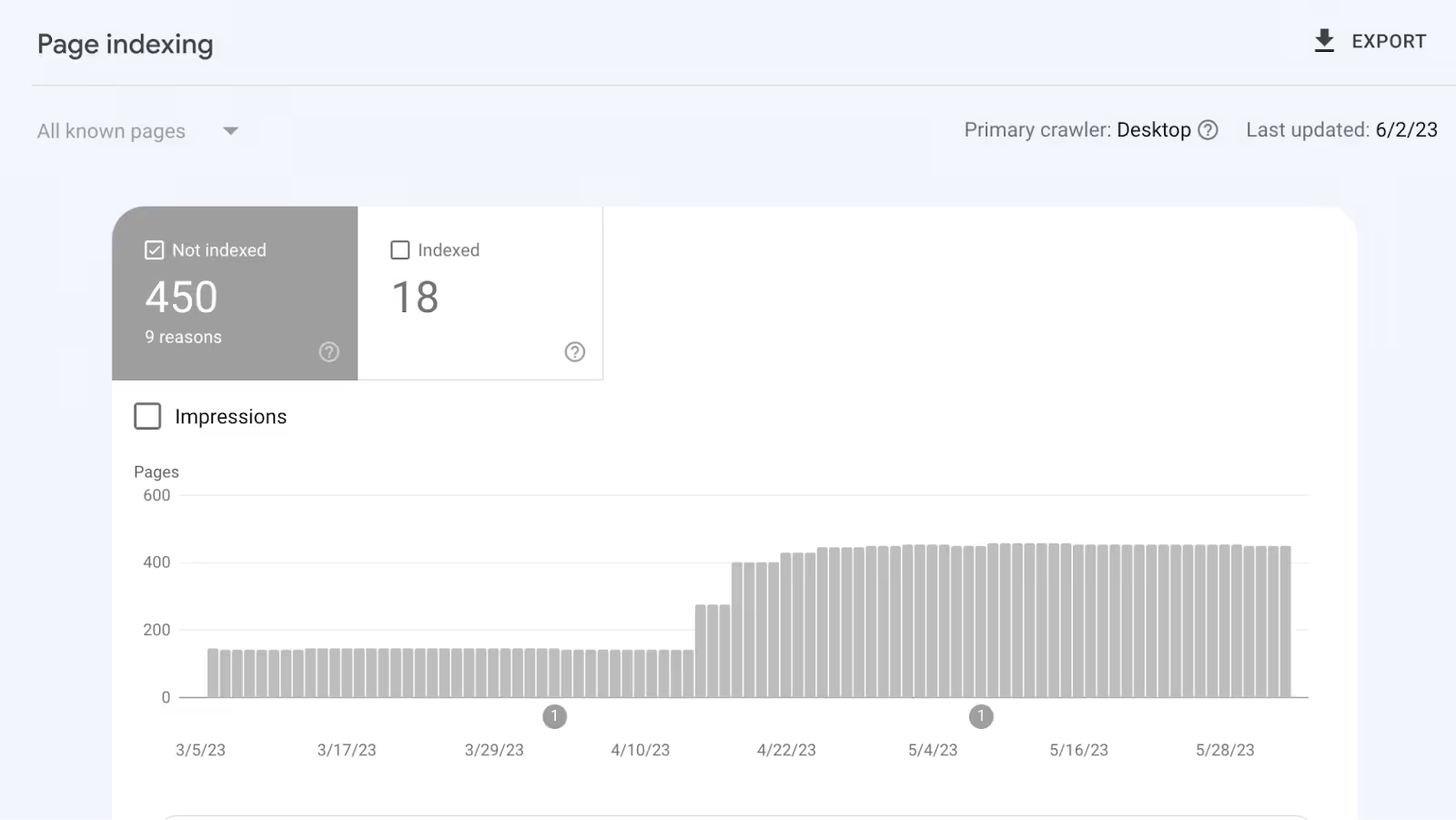

In Search Console, on the right-hand side, you’ll see Indexing. Click on Pages under Indexing. You’ll then see a breakdown of the indexed and unindexed pages on your website:

If you scroll down, you’ll see a section called Why Pages Aren’t Indexed. Look for the reason being Duplicate Without User-Selected Canonical:

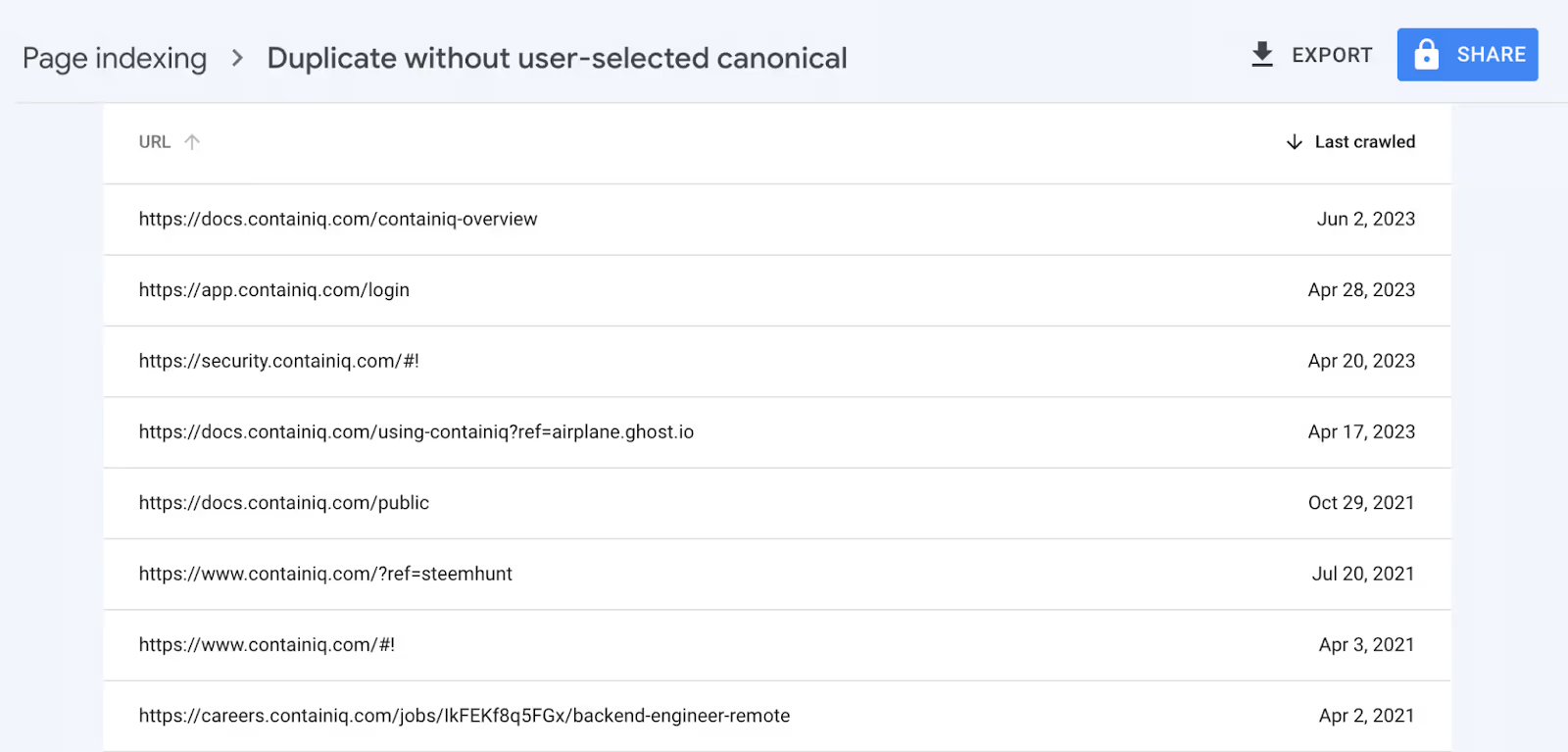

In the example above, this website has eight pages that are duplicative of other pages on the website, and there isn’t a canonical tag in place to tell Google where the original source of that page exists. As a result, Google has chosen not to index these pages. If you click on the result, Google will provide you with a list of the affected pages:

While Search Console is helpful for identifying pages that are not indexed as a result of duplicate content, it’s less helpful in terms of spotting duplicate content within indexed pages, which may also be negatively impacted.

Positional

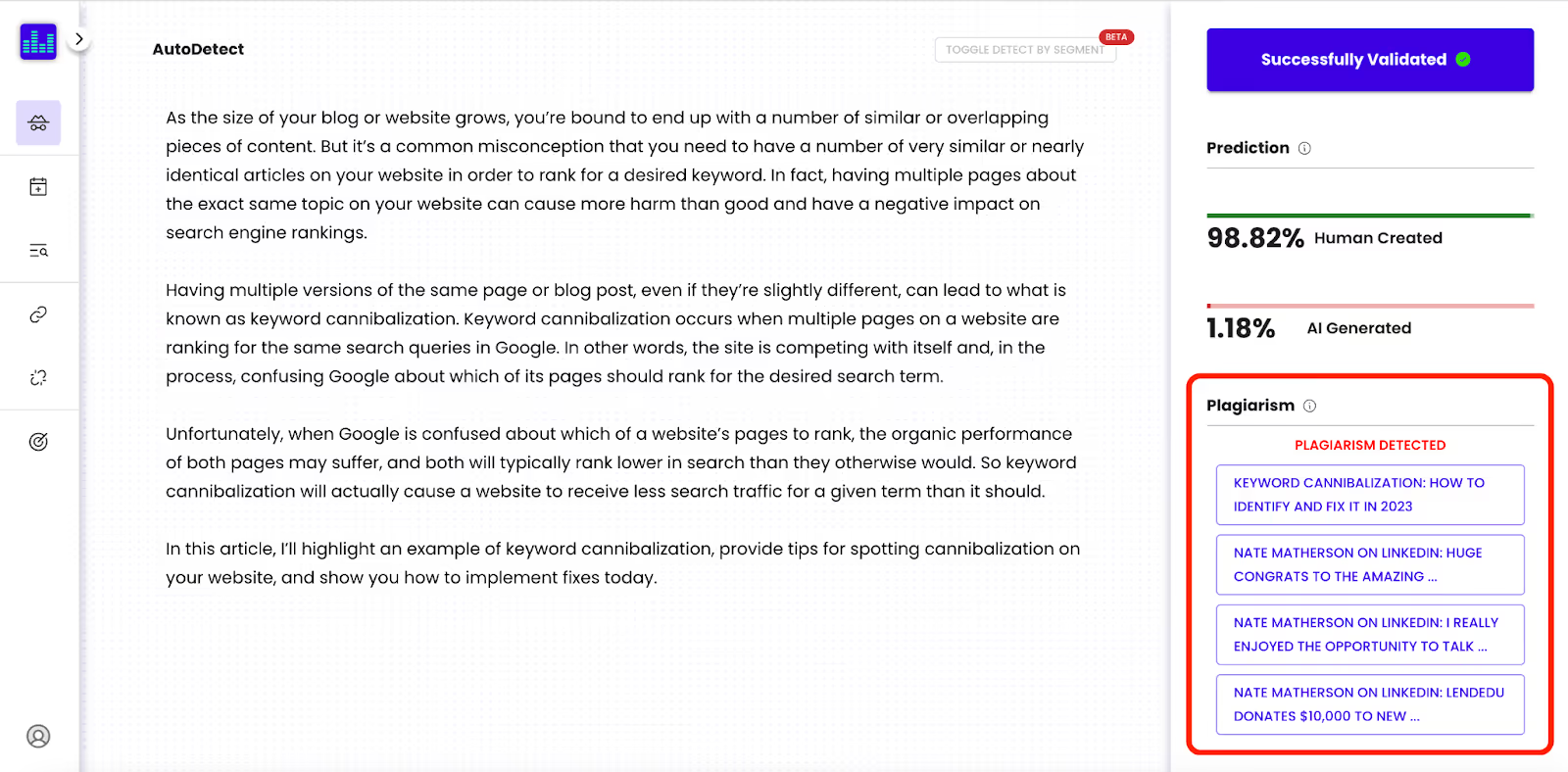

Positional offers a number of tools for content marketing and SEO teams. Our AutoDetect toolset can be used to detect duplicate, plagiarized, and AI generated content.

In Positional, go to our AutoDetect toolset. Then copy and paste the content into the collection window and click on Validate Content:

On the right-hand side of the results page. you’ll see a section labeled Plagiarism. If any of the content is plagiarized or duplicative to another page on the internet, and AutoDetect is able to detect it, the plagiarized content examples will be highlighted in this section. For each result, you can see which parts of the text are duplicate, as well as the overall percentage of each result.

If you’re creating a large number of similar pages on your website, you can use Positional to see how unique those pages are. In addition, Positional offers detection for AI-generated content too. If you’re using AI to generate content, you can check to see how human or how AI your content appears to be, as well as edit that content to make it more original, in accordance with Google’s general recommendations.

As a best practice, you can use Google Search Console alongside Positional.

How to Fix Duplicate Content

Fixing duplicate content issues is relatively easy if you have a smaller website. However, it can become very challenging to implement best practices on very large websites or on websites with substantial amounts of programmatically created content. There are five general steps to take to reduce duplicate content issues and ensure that best practices are implemented.

1. Use Canonicals Properly, Internally and Externally

First introduced by search engines in 2009, canonical tags are essential for overcoming duplicate content issues. Canonicalization is the process of choosing a single URL to represent a group of very similar URLs.

Implementing canonical tags on your pages is a straightforward process. Canonical tags are placed in the <head> section of a webpage’s HTML, using the following format:

<link rel=“canonical” href=“https://example.com/test-page/” />

When you add this HTML tag to a duplicate URL or page, you specify the master or primary URL in the href section. You can include canonical tags to your own pages, as well as to third-party pages if your website is hosting content that has been previously published elsewhere.

In short, the canonical tag tells search engines which version of a page to index and rank in search. Importantly, this also communicates to search engines where the PageRank (or link equity or link juice, as it is commonly referred to) should be passed. For example, if you have a number of very similar URLs that have accumulated backlinks, the canonical tag will pass that page authority to the correct and primary version of that URL.

A content management system (CMS) like WordPress will allow you to specify the canonical tag using a frontend editor. However, if necessary, you can specify these tags by editing the <head> section of the HTML.

2. 301 Redirect Similar Pages

If you have a large number of nearly identical pages and each doesn’t serve a unique purpose , you could, instead of using a canonical tag, combine them into a single more valuable page.

In this case, you’d want to remove the identical pages and 301 redirect the different URLs over to your primary page.

3. Reduce Internal Copied Material

If you have internal duplicate content issues, and your pages aren’t ranking as well as you’d like, you should work to clean up this content.

While it’s hard to say exactly how much content can be reused from one page on your website to the next, I’d personally try to avoid having more than ten or twenty percent of the copy on a page identical to copy on other pages.

This goes for programmatically created content, too. If you’re programmatically creating a large number of location-based pages, for example, you should still work to make each of those pages uniquely valuable with helpful content about each of those specific locations.

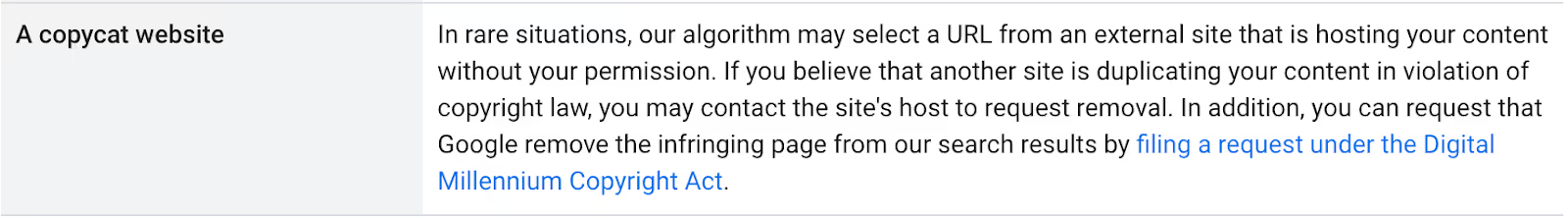

4. Request That External Websites Remove Duplicate Content

If you’re running into duplicate content issues as a result of other sites copying your content without permission, you can take steps to improve the situation.

In the Google Search Essentials guidelines, Google instructs you to request that they remove the infringing page from their search results by submitting a request under the Digital Millennium Copyright Act.

Google does not specify how long it will take for them to review your filing and remove the infringing material from their search results. That being said, Google’s algorithms have become increasingly good at identifying the original source of copied content. And if your content has been published for a meaningful period of time, it will be easier for them to recognize your webpage as the original source of that material.

5. Use the Noindex Tag with Caution

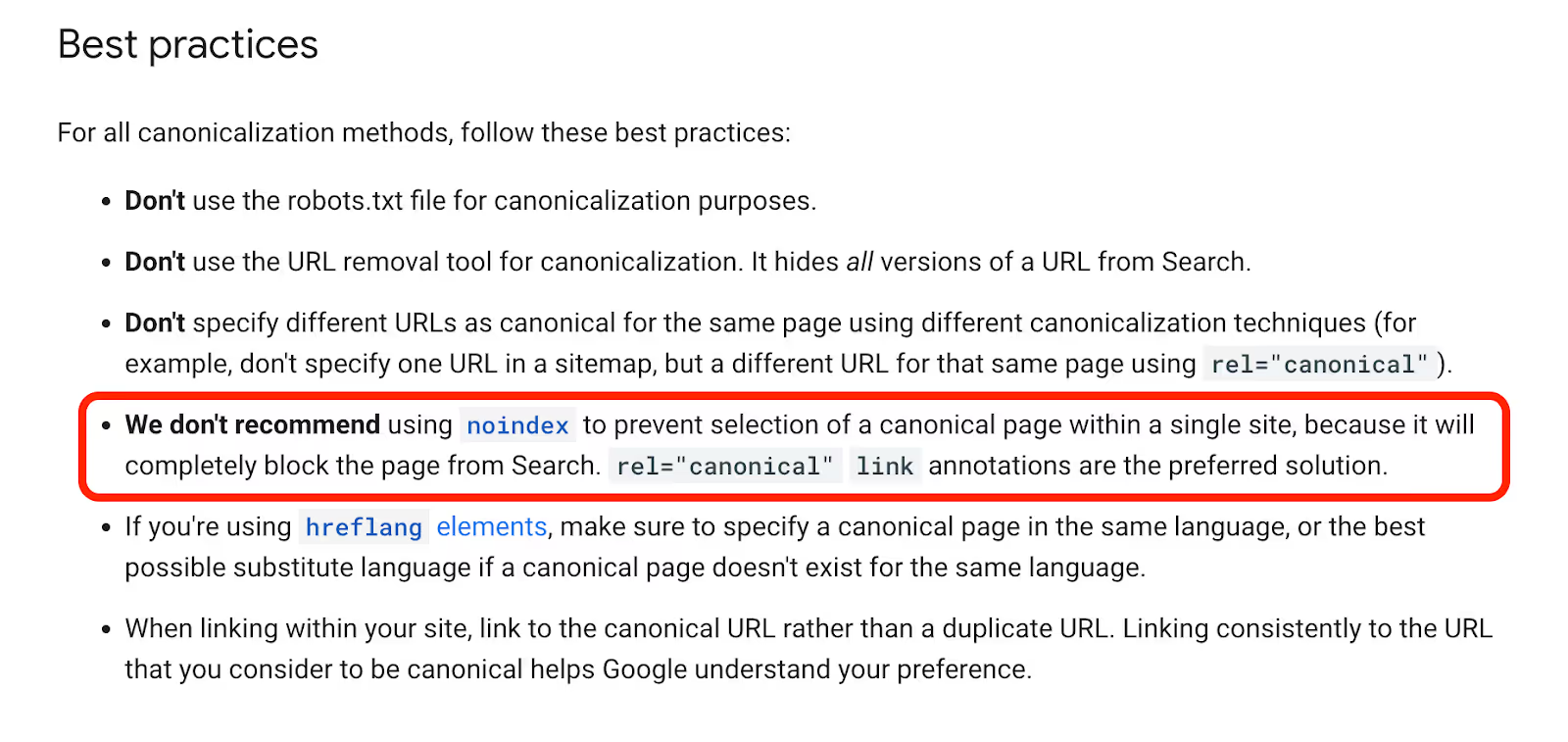

You could, in theory, use the noindex tag as a way to tell search engines not to index or care about a webpage with duplicate content. While this should work, Google has gone out of its way to tell you not to do this in their Search Essentials guidelines:

Instead, you should use canonical links — the preferred solution.

Final Thoughts

Duplicate content issues are worth investigating and fixing as part of your next website audit.

If you’re publishing a large number of similar pages, remember to add substantial amounts of unique content to each page. Otherwise, you might run into internal duplicate content issues that can ultimately lead to indexing challenges and keyword cannibalization.

And as a best practice, make sure that you aren’t copying third-party websites during your content creation process — and as a result creating external duplicate content issues. If other websites are copying your content without permission, you should take action and request that search engines remove the infringing pages from their index.

Duplicate content is often necessary and can be helpful for your business. Whether you’re republishing your content to third-party websites or using URL parameters to serve different versions of content internally, don’t forget to include a canonical URL back to the primary source of the content.

Google Search Console and Positional are helpful tools for spotting duplicate content. Search Console will alert you to any duplicative content where a canonical tag is missing. And Positional allows you to check content for plagiarism on both your own website and external sites.

If you get stuck debugging a duplicate content issue, feel free to reach out to me directly at nate@positional.com — I’d be happy to take a look. And if you’re interested in trying Positional, you can sign-up for our private beta on the homepage of our website.