Being indexed by search engines has many obvious benefits, including increased global visibility and a boost in organic traffic. It’s also a cost-effective marketing approach that builds credibility and trust. But indexing doesn’t just magically happen. Search engines need to crawl your site and discover its pages, and anything you can do to decrease the effort required to do that is going to increase your chances of ranking well.

A critical part of ensuring that your site is indexed is submitting your sitemap to search engines. This post will provide step-by-step instructions on how to do that, but first, let’s go through a quick overview of what a sitemap is.

What Is a Sitemap, and What Is Its Purpose?

In simple terms, a sitemap is a specialized text document — most often in the XML format — that lists all the accessible and/or relevant pages of your website. You can think of it as a roadmap or a table of contents for your website, tailored for search engines.

The primary purpose of a sitemap is to inform search engines about your site’s structure, making it easier for search engine crawlers (like Googlebot) to find and index all of your content, which in turn increases the likelihood of your pages appearing on search engine results pages (SERPs). Although sitemaps can grow to be complex enough that splitting them up into multiple sitemaps becomes a practical option, a simple one might look like this:
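As a hedged sketch, the Python snippet below generates a small two-page sitemap using only the standard library. The example.com URLs, dates, and tag values are placeholders chosen for illustration, and build_sitemap is a hypothetical helper name rather than part of any SEO tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for a list of page dicts (loc, lastmod, changefreq, priority)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        # Emit the child tags in the conventional order, skipping any that are absent.
        for tag in ("loc", "lastmod", "changefreq", "priority"):
            if tag in page:
                ET.SubElement(url, tag).text = page[tag]
    ET.indent(urlset)  # pretty-print; requires Python 3.9+
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


# Placeholder pages, for illustration only.
pages = [
    {"loc": "https://www.example.com/", "lastmod": "2023-05-03",
     "changefreq": "weekly", "priority": "1.0"},
    {"loc": "https://www.example.com/blog/", "lastmod": "2023-05-01",
     "changefreq": "daily", "priority": "0.8"},
]
print(build_sitemap(pages))
```

The first line of the printed output is the XML declaration, followed by a urlset element that wraps one url entry per page.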

The first line tells any human or computer reading the file that it’s an XML file, that it’s version 1.0, and that the character encoding is UTF-8. These elements (commonly referred to as “tags” and “attributes”) aren’t important for most people to know, aside from the fact that they make for valid XML. For a more detailed overview of what XML is and how it’s structured, check out this explanation from W3Schools.

These are the important tags for someone working on SEO to know:

- loc: Specifies the URL related to the following tags.

- lastmod: Indicates when the page was last changed. This is a crucial tag to keep updated, as Google historically prioritizes fresh content.

- changefreq: Tells search engine crawlers how often they should expect a given page to be updated. This can be used to optimize the way crawlers utilize your crawl budget. However, do note that this is merely informational, not directive; it’s up to the crawlers to decide how frequently they crawl your site.

- priority: Informs crawlers about how you believe pages should be prioritized. As with the <changefreq> tag, it’s up to search engines to decide whether they’ll follow your recommendation.

Although it’s common practice to include all of these tags, and doing so won’t hurt your pages’ performance, there’s debate in the SEO community about whether the <changefreq> and <priority> tags actually help. Google’s sitemap documentation, for one, states that Google ignores both values.

Submitting Your Website’s Sitemap to Search Engines

The exact steps for submitting a sitemap vary from search engine to search engine. Some require the use of proprietary webmaster tools like Google Search Console (GSC), some rely on other search engines’ webmaster tools, and some rely solely on discovering your sitemap as part of crawling and indexing your site’s pages. No matter the process, the steps outlined in the sections below should increase your visibility on SERPs.

How to Submit to Google

According to Google’s official documentation, there are three main ways of submitting your sitemap:

Submitting Through Google Search Console

Adding a sitemap through GSC is as simple as visiting the Sitemaps report page in GSC, entering the link to your sitemap, pressing Submit, and then waiting for Google to process it.

Using the Google Search Console API

Google also offers an API that you can use if you have programming skills. You can even submit your sitemap with a simple curl command:

Do note, however, that you will need to understand and utilize Google’s authorization and authentication methods for APIs.
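To give a rough idea of what such an API call involves, the Python sketch below builds (but does not send) the HTTP request. The endpoint path reflects my understanding of the Search Console API’s sitemaps.submit method and should be checked against Google’s API reference; the example.com URLs and the access token are placeholders, and build_submit_request is a hypothetical helper name.

```python
import urllib.parse
import urllib.request

# Assumed base URL of the Search Console (Webmasters v3) API.
API_BASE = "https://www.googleapis.com/webmasters/v3"


def build_submit_request(site_url, sitemap_url, access_token):
    """Build (but do not send) a PUT request that submits a sitemap."""
    # Both URLs are embedded in the request path, so every reserved
    # character, including "/" and ":", must be percent-encoded.
    site = urllib.parse.quote(site_url, safe="")
    feed = urllib.parse.quote(sitemap_url, safe="")
    endpoint = f"{API_BASE}/sites/{site}/sitemaps/{feed}"
    return urllib.request.Request(
        endpoint,
        method="PUT",
        headers={"Authorization": f"Bearer {access_token}"},
    )


request = build_submit_request(
    "https://www.example.com/",             # placeholder property URL
    "https://www.example.com/sitemap.xml",  # placeholder sitemap URL
    "YOUR_OAUTH_ACCESS_TOKEN",              # placeholder token
)
print(request.get_method(), request.full_url)
```

Obtaining a valid OAuth 2.0 access token is the harder part and is deliberately left out here; once you have one, the request could be sent with urllib.request.urlopen(request).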

Using Google’s Ping Tool

Last but not least is Google’s own Ping tool, which is accessible through a simple GET request, so all you need is your browser. For instance, submitting Positional’s sitemap with the Ping tool would simply require me to visit the following URL in my browser: https://www.google.com/ping?sitemap=https://www.positional.com/sitemap.xml. Note that Google announced in 2023 that it is retiring this ping endpoint, so confirm that it still works before relying on it.

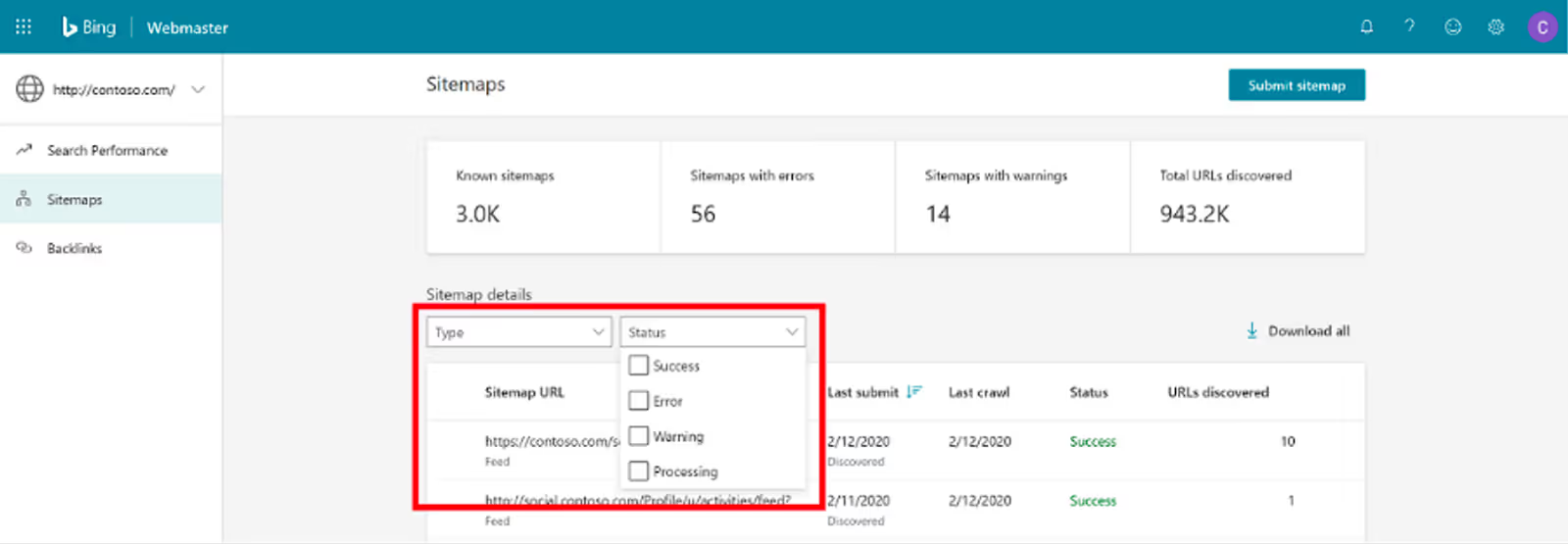

How to Submit to Bing

Submitting a sitemap to Bing is as simple as logging in to Bing Webmaster Tools and then selecting your sitemap. If your sitemap is updated, or if it needs to be resubmitted for another reason, you can resubmit it from Webmaster Tools as well.

How to Submit to Yahoo

Thanks to the collaboration between Bing and Yahoo, submitting your sitemap to Bing will automatically submit it to Yahoo as well.

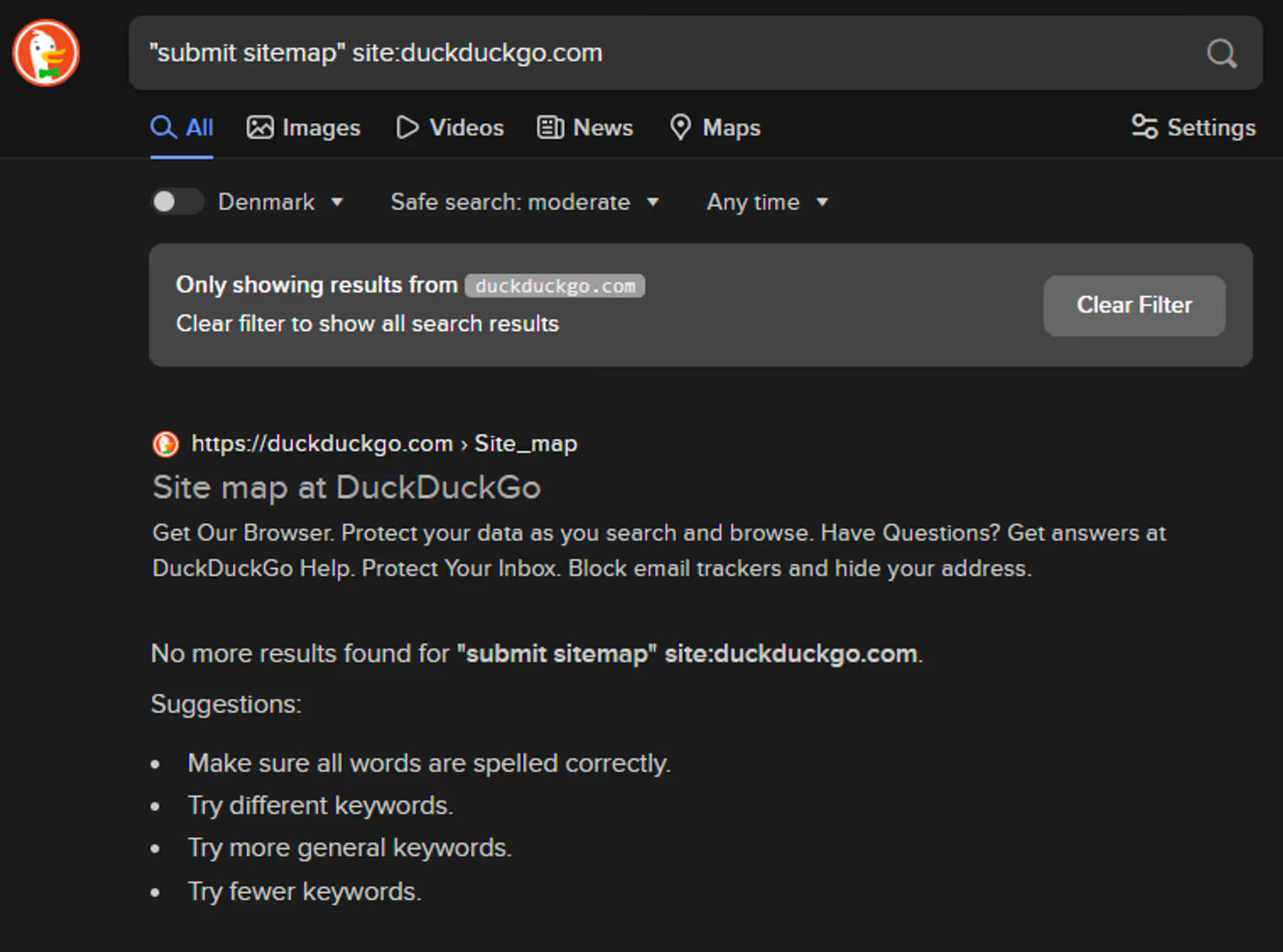

How to Submit to DuckDuckGo

There is currently no way of submitting a sitemap directly to DuckDuckGo.

How to Submit to Baidu

Baidu is a Chinese search engine that’s used very little outside of China, so finding English documentation on submitting a sitemap is challenging. It is, however, possible to find Chinese instructions that can then be translated in-browser.

How to Submit to Yandex

Submitting a sitemap to Yandex is as simple as going to the Sitemap files section in their webmaster portal and then adding your sitemap.

Fixing Common Indexing Issues

When you’re working with sitemaps, there are a number of issues you may run into. Although the list that follows is far from exhaustive, it contains some of the most common ones with the easiest fixes.

With many of these issues, there’s not actually a problem with your sitemap; rather, the issue stems from other crawl functionality overruling what the sitemap is saying.

Excluded with Noindex

By far the most common indexing issue is a rogue noindex tag, which tells search engines to, well, not index a page. These tags are usually used for pages that aren’t intended to be publicly accessible, like admin pages or temporary promotions. However, they’re sometimes added to other pages like blog posts or landing pages as part of a mass migration or other type of site change that affects many pages.

Thankfully, this is an easy issue to troubleshoot — it just requires that you examine the <head> section of your webpages, looking for a <meta name="robots" content="noindex"> tag. If you find that on a page you do want to index, remove it.

There are also a number of tools to help troubleshoot this — for instance, the Ahrefs SEO Toolbar extension.
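If you’d rather script the check, the minimal Python sketch below scans a page’s HTML for a robots meta tag containing noindex. It assumes you already have the HTML as a string, and it only looks at the generic robots meta tag, not crawler-specific tags or HTTP headers. RobotsMetaParser and has_noindex are hypothetical names.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # The content attribute may hold several comma-separated directives.
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html):
    """Return True if the page's robots meta tag contains a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))  # True
```

Running this over every URL in your sitemap is one way to spot pages that you are asking search engines to index and not index at the same time.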

Blocked by Robots.txt

While noindex tags are easy to troubleshoot, as they’re found on individual pages, sometimes your pages will be blocked by your site’s robots.txt file. A robots.txt file is very similar to a sitemap.xml file, in that it lives in the root directory of your website, with the purpose of providing information to search engine crawlers. Unlike a sitemap.xml file, a robots.txt file is often used to tell crawlers which pages not to crawl. For instance, Ahrefs’ robots.txt file looks like this:

The first line states that the following directives should be respected by anyone trying to crawl the website. It’s also possible to specify a crawler, such as Googlebot. Following this, the file specifies a number of Allow and Disallow lines, with some lines disallowing specific pages like /team-new and other lines disallowing entire directories like /site-explorer/*. This is where you need to be careful.

It may be tempting to write Disallow: /blog/ to avoid “duplicate” content resulting from excerpts of your blog posts being present on the blog overview page. However, this syntax would end up blocking any URL starting with /blog/. You can solve this either by removing the line altogether or by adding an Allow: /blog/* rule to the robots.txt file. However, for a single page, it’s recommended that you instead use the noindex tag mentioned in the previous section.
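To see which URLs a given rule blocks before you deploy it, you can test it locally. The sketch below uses Python’s built-in urllib.robotparser with a hypothetical set of rules and example.com URLs. Be aware that this parser uses simple prefix matching and does not implement the wildcard handling of crawlers like Googlebot, so treat it as a rough check rather than a faithful simulation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration, not taken from any real site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog/
Disallow: /team-new
"""


def is_crawlable(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in (
    "https://www.example.com/blog/",
    "https://www.example.com/blog/how-to-submit-sitemaps/",
    "https://www.example.com/pricing/",
):
    print(url, is_crawlable(ROBOTS_TXT, url))
```

With these rules, both /blog/ URLs are reported as blocked, while /pricing/ is reported as crawlable, which illustrates how a single Disallow line can take an entire blog out of the crawl.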

Redirected Pages

Redirects are a great way of keeping a URL alive even after you’ve made changes to your site’s structure. For instance, you may have updated a blog post’s URL from /2023/05/03/sitemap/overview to /blog/sitemaps, and instead of having the original link result in a 404 page, you make it 301 redirect to the new URL.

However, misusing redirects can have severe consequences. Too many redirects can end up slowing down loading times, confusing search engines, and using up your crawl budget unnecessarily. A common mistake with redirects and sitemaps is to use trailing slashes inconsistently, or to leave them out altogether. Let’s revisit the sitemap example from the beginning of this post, and remove the trailing slashes:

On the surface, this doesn’t seem like a big deal, and initially, that may even be true. But if your server redirects URLs without a trailing slash to the version with one, every page listed in the sitemap will need to be redirected. As your website grows and more pages are added to your sitemap, there’s an increasing likelihood that this will impact your SEO performance. Effectively, you’re doubling the number of requests a crawler must make when crawling your site.

Note that having a single redirect may not be the end of the world, but redirect chains can have dire consequences.
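A quick way to catch this is to scan the URLs in your sitemap for missing trailing slashes. The Python sketch below assumes that your site’s canonical URLs end with a slash (if your site uses the opposite convention, invert the check); the example.com URLs are placeholders, and missing_trailing_slash is a hypothetical helper name.

```python
import posixpath
from urllib.parse import urlparse


def missing_trailing_slash(urls):
    """Return the URLs whose path lacks a trailing slash, ignoring file-like paths."""
    flagged = []
    for url in urls:
        path = urlparse(url).path
        # Paths such as /sitemap.xml or /logo.png point to files,
        # so they are not expected to end with a slash.
        has_extension = bool(posixpath.splitext(path)[1])
        # An empty path (the bare domain) is equivalent to "/" and is skipped.
        if path and not path.endswith("/") and not has_extension:
            flagged.append(url)
    return flagged


urls = [
    "https://www.example.com/",
    "https://www.example.com/blog",
    "https://www.example.com/blog/sitemaps/",
    "https://www.example.com/sitemap.xml",
]
print(missing_trailing_slash(urls))  # ['https://www.example.com/blog']
```

Any URL this flags is a candidate for an unnecessary redirect and is worth correcting in the sitemap itself.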

Duplicate Pages or Content

When multiple URLs have the same or very similar content, search engines may become confused as to which URL should rank for a given keyword. A best-case scenario is that only one of the URLs will rank, but more likely, none of the URLs will rank. This also ties into the bigger concept of keyword cannibalization.

There are many ways of fixing this, from utilizing noindex tags to removing duplicate content altogether. You can find many more tips — as well as a deeper explanation of what duplicate content is and how to find it — in this comprehensive overview of duplicate content.
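As a starting point for finding exact duplicates, the sketch below groups URLs whose text is identical once case and whitespace are normalized. It assumes you have already extracted each page’s body text into a dictionary; the paths and text are made up, and fingerprint and find_duplicates are hypothetical names. Near-duplicates with small wording differences would need a fuzzier comparison than this.

```python
import hashlib
from collections import defaultdict


def fingerprint(text):
    """Hash the text after lowercasing it and collapsing runs of whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs with identical normalized text; pages maps URL -> body text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    # Only groups with more than one URL are duplicates.
    return [urls for urls in groups.values() if len(urls) > 1]


# Made-up pages, for illustration only.
pages = {
    "/blog/sitemaps/": "How to submit a sitemap",
    "/blog/sitemaps/?ref=home": "How to  submit a\nsitemap",
    "/pricing/": "Plans and pricing",
}
print(find_duplicates(pages))  # [['/blog/sitemaps/', '/blog/sitemaps/?ref=home']]
```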

Thin Content

Having thin content on your website often goes hand in hand with having duplicate content, as you’re covering very closely related topics (or the same topic) on multiple pages. Thin content is characterized by pages that either add little to no value or lack in-depth information.

Avoiding thin content can be fairly straightforward, and you start by consolidating related information on a single page. For instance, this post itself could have been split into multiple posts, such as:

- /what-are-sitemaps/

- /how-to-submit-sitemaps/

- /common-indexing-issues/

- /fixing-common-indexing-issues/

When you’re working to avoid thin content by grouping related ideas on one page, it’s very important to denote the different sections with relevant and easily understood headers — not only to make it easier for readers to navigate but also to make it easier for search engines to parse the text and determine the keywords the page should rank for.

Final Thoughts

Generating and submitting a well-formatted sitemap is an important step in your SEO journey, as it essentially provides search engines with a roadmap of your site, making it much easier to crawl and subsequently rank. However, it is only a first step.

Submitting your sitemap to Google Search Console is a good place to start, and it takes only a few minutes.

If you want to learn more about content marketing and SEO, Positional’s blog and podcast are great places to start.