In SEO terms, saying that a webpage has been indexed means that Google has crawled it and made it accessible via Google’s search engine.

Indexing is incredibly important for content marketers, because if our webpages aren’t indexed, they won’t rank in organic search results or drive traffic back to our websites.

Indexing should generally happen naturally as Google crawls your website and discovers the awesome webpages you’ve created. However, indexing issues are fairly common for new or small websites, as well as for very large websites with hundreds of thousands or even millions of pages.

Fortunately, checking for indexing is fairly straightforward. And there are steps you can take to increase the chances of Google indexing your webpages. In this article, we’ll show you how to check for indexing, provide suggestions for improving indexing, and highlight a few common indexing issues.

Indexing 101

Since its inception, Google’s mission has been to organize the world's information and make it universally accessible and useful. Of course, there are multiple search engines that you might want your webpages to be indexed by, such as Bing and DuckDuckGo. However, in this article, we’re going to focus on Google’s search engine, given Google’s significant search market share.

In order to discover the world’s information, Google uses crawlers (the generic name for these crawlers is “Googlebot”) to find and understand the information contained on your website. Google has finite resources, and it dedicates different amounts of them to crawling different websites on the internet.

As Google and other search engines crawl the internet, they index pages that they believe are helpful for searchers. After being indexed, these webpages will be discoverable on search engine results pages (SERPs).

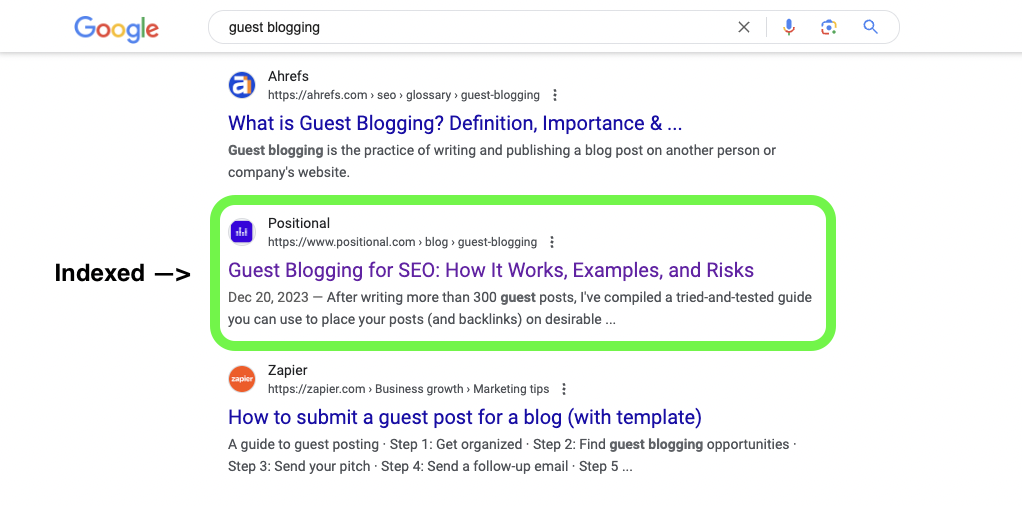

Our blog post about guest blogging is shown as indexed and ranked.

Today, Google predominantly uses the mobile versions of your webpages when crawling, indexing, and ranking your webpages. This is typically referred to as mobile-first indexing.

If you haven’t already done so, you’ll want to submit your sitemap in Google Search Console (GSC). Your sitemap lists the URLs on your website that you want search engines to find, and by submitting it to Google directly, you’re giving Google a clear signal about which pages on your site should be indexed. (More on this later.)
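A sitemap is just an XML file that follows the sitemaps.org protocol, and most content management systems generate one for you. If yours doesn’t, here’s a minimal Python sketch of what the file contains; the page URLs and dates are hypothetical placeholders, and `build_sitemap` is a name chosen for this example:

```python
from datetime import date
from xml.etree import ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Return sitemap XML for a list of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, <, >
        # <lastmod> accepts W3C Datetime; a plain YYYY-MM-DD date is valid.
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages, for illustration only.
    pages = [
        ("https://www.example.com/", date(2024, 1, 15)),
        ("https://www.example.com/blog/guest-blogging", date(2024, 2, 1)),
    ]
    print(build_sitemap(pages))
```

The output would then be published on your site (commonly at /sitemap.xml, though that location is only a convention) before you submit its URL in GSC.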

How Long Does Indexing Take?

For new or small websites, it can often take some time for new webpages to be indexed and then to rank in search results. In the Google Search Console Help Center, Google says that you should allow at least a week before assuming that there is a problem.

However, there are a lot of potential variables in play here. For example, new websites often experience delays in indexing. When we first started our blog at Positional, it would often take a couple of weeks for our new webpages to index.

But over time, you’ll find that indexing will happen faster and faster. Today, our blog posts are typically indexed within a day after publishing. As you build a rapport with Google, they will want to crawl your website more regularly. Before Positional, I scaled a blog to 200,000 visitors per month; it got to the point where new content would typically index within a few hours, given that Google was crawling our website multiple times per day.

It is worth mentioning that if you’ve recently published a large number of new webpages (as part of a programmatic SEO strategy, for example), it might take longer for Google to dedicate the resources needed to crawl all of them.

How to Check for Indexing

There are several different approaches to determining whether your webpages are indexed in Google’s search results.

Check Manually

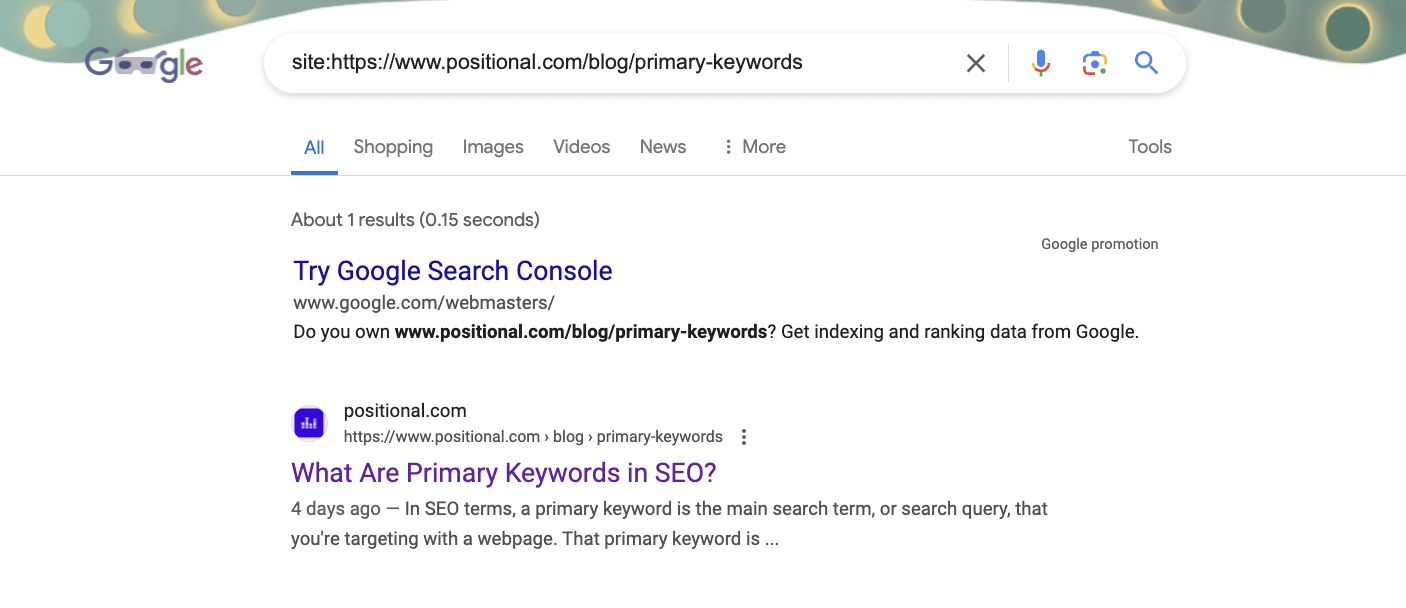

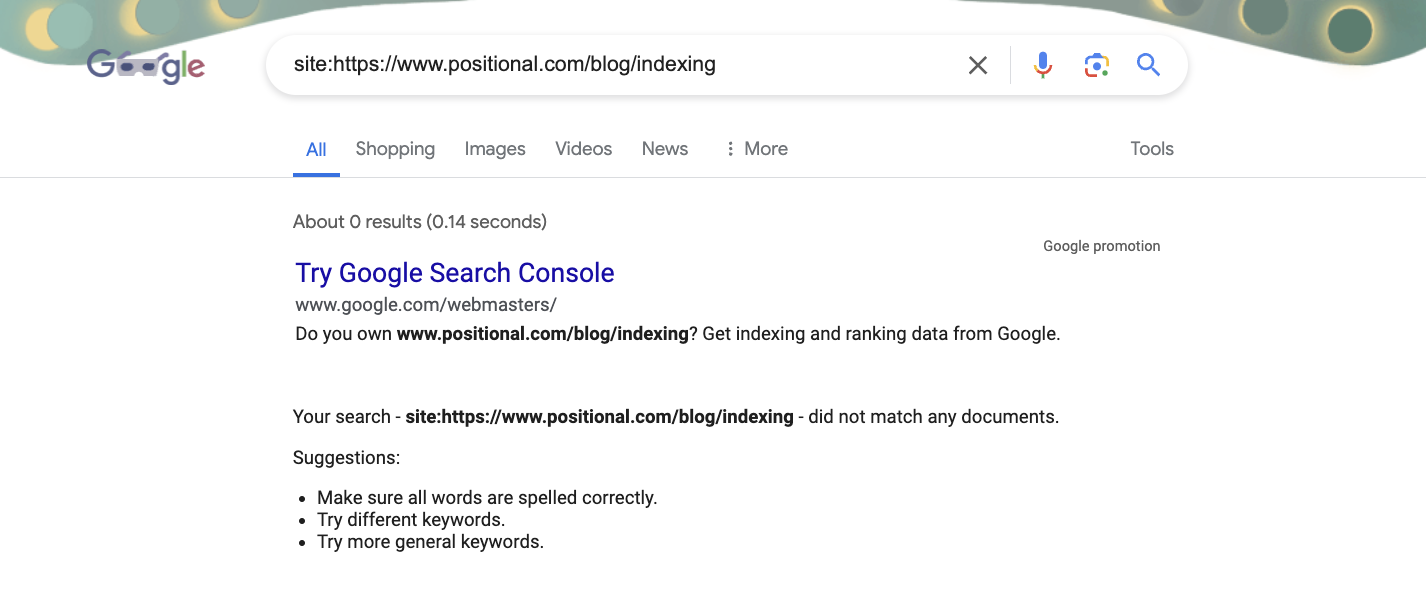

If you’re pressed for time, you can quickly check to see whether a webpage is indexed in Google’s search engine by using the site search functionality.

Simply go to Google, type “site:” and then the page’s URL (with no space) into the search bar, and then hit enter or return:

If a search result appears for your URL, then the URL has been indexed into search results. However, if you don’t see a result, the webpage hasn’t been indexed just yet:

This is a fairly manual process, and if you’re dealing with a large number of URLs, GSC will provide a more robust experience.
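If you only have a handful of URLs to spot-check, a tiny helper can generate the site: search links for you to open in a browser. This is a sketch with a hypothetical URL list and a helper name of our own (`site_query_url`); it deliberately only builds links and doesn’t fetch or scrape Google’s results:

```python
from urllib.parse import urlencode


def site_query_url(page_url: str) -> str:
    """Return a Google search link for a `site:` query on a single page."""
    # "site:" is followed directly by the URL, with no space in between.
    return "https://www.google.com/search?" + urlencode({"q": f"site:{page_url}"})


if __name__ == "__main__":
    # Hypothetical URLs to check by hand.
    for url in [
        "https://www.example.com/blog/guest-blogging",
        "https://www.example.com/blog/new-post",
    ]:
        print(site_query_url(url))
```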

Check Using Google Search Console

GSC provides several tools that enable you to check for indexing and monitor page performance.

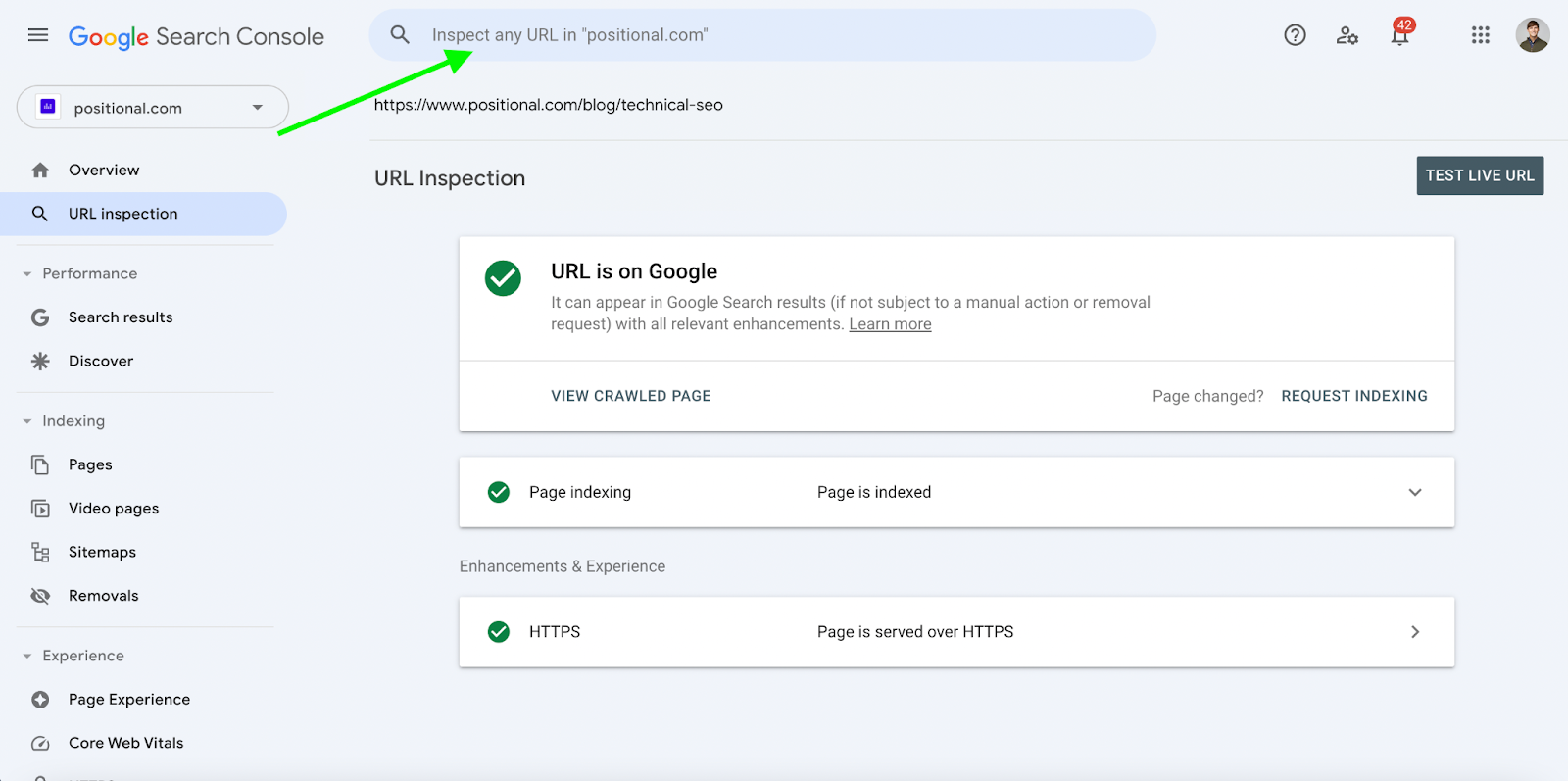

The first approach would be to inspect a URL using GSC. Input the URL in question into the search bar at the top of GSC, and press enter or return. Google will then provide you with the status of the URL you entered (it may take a few seconds to load):

If the URL has been indexed, you’ll see a green checkmark and a confirmation that the URL is indexed on Google (as shown above). You can also expand the Page indexing accordion to see when Google last crawled the URL and how Google discovered it.

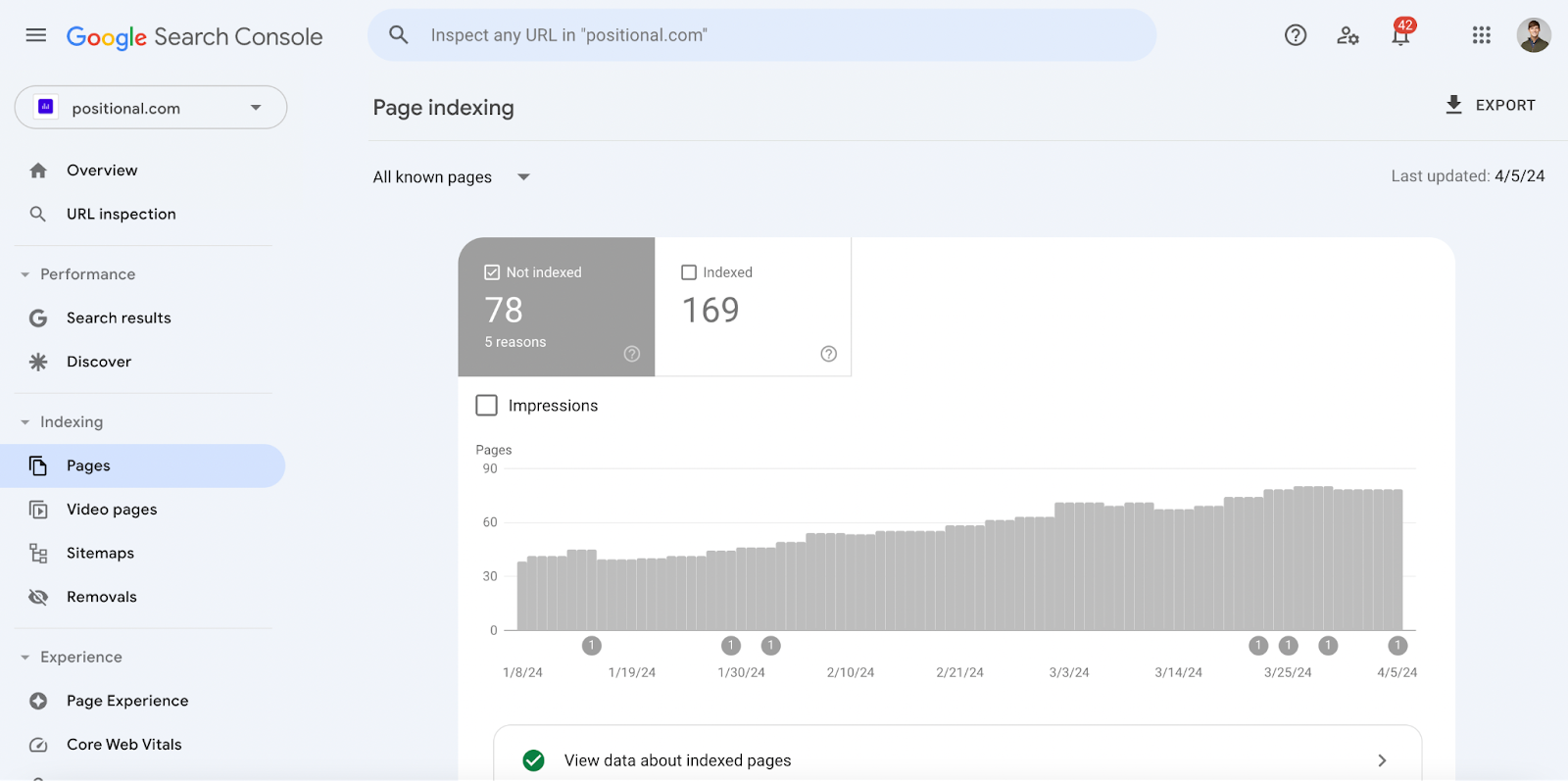

If you’re dealing with a large number of URLs, an alternative approach would be to check for indexing using the Indexing > Pages section of GSC:

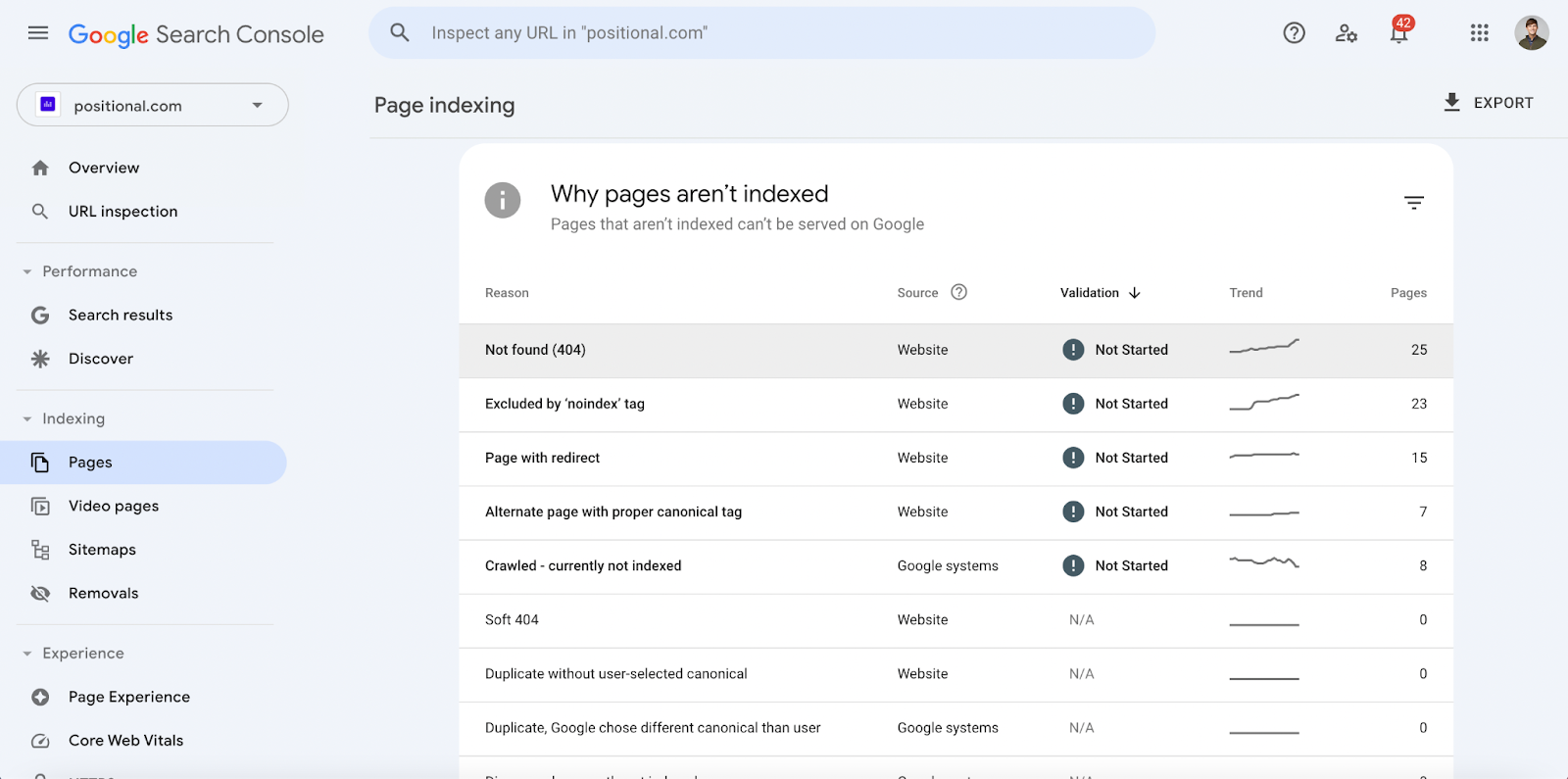

GSC will provide a table view of indexed URLs and a table view of URLs that are not currently indexed. For example, in our GSC account, we see that 78 URLs haven’t been indexed, and Google has provided a number of reasons as to why:

These include a number of Not found (404) URLs, several URLs that are currently excluded by a noindex tag, and a small number of pages designated Crawled - currently not indexed. (More on that later.)

You can then click a table row to see the affected URLs for that reason. If you’d like, you can inspect any of those URLs and manually request indexing. You can also check indexing status programmatically with the URL Inspection API, which is part of the Google Search Console API.
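As a rough sketch of the API route, the Python below calls the URL Inspection API’s REST endpoint using only the standard library. It assumes you already have an OAuth 2.0 access token for an account with access to your GSC property (obtaining one is out of scope here) and that `site_url` matches the property exactly, e.g. https://www.example.com/ or sc-domain:example.com. The helper names are our own, not part of any Google library:

```python
import json
import urllib.request

# REST endpoint for the Search Console URL Inspection API.
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspection_request(page_url: str, site_url: str,
                             access_token: str) -> urllib.request.Request:
    """Prepare (but don't send) an inspection request for one page."""
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": site_url}).encode("utf-8")
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",  # assumed OAuth 2.0 token
            "Content-Type": "application/json",
        },
    )


def summarize(response: dict) -> dict:
    """Pull the index-status fields out of an inspection response."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return {
        "verdict": status.get("verdict"),           # e.g. "PASS" when indexed
        "coverage": status.get("coverageState"),    # e.g. "Submitted and indexed"
        "last_crawl": status.get("lastCrawlTime"),  # missing if never crawled
    }


def inspect_url(page_url: str, site_url: str, access_token: str) -> dict:
    """Send the request (needs network access and a valid token) and summarize it."""
    request = build_inspection_request(page_url, site_url, access_token)
    with urllib.request.urlopen(request) as response:
        return summarize(json.load(response))
```

For example, `inspect_url("https://www.example.com/blog/guest-blogging", "sc-domain:example.com", token)` would return the verdict, coverage state, and last crawl time for that page. The API is subject to per-property quotas, so on very large sites it’s better suited to spot checks than to inspecting every URL.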

How to Improve Indexing

There are several different ways to improve indexing or the rate at which Google is indexing pages from your website.

Ensure That You’ve Submitted a Sitemap

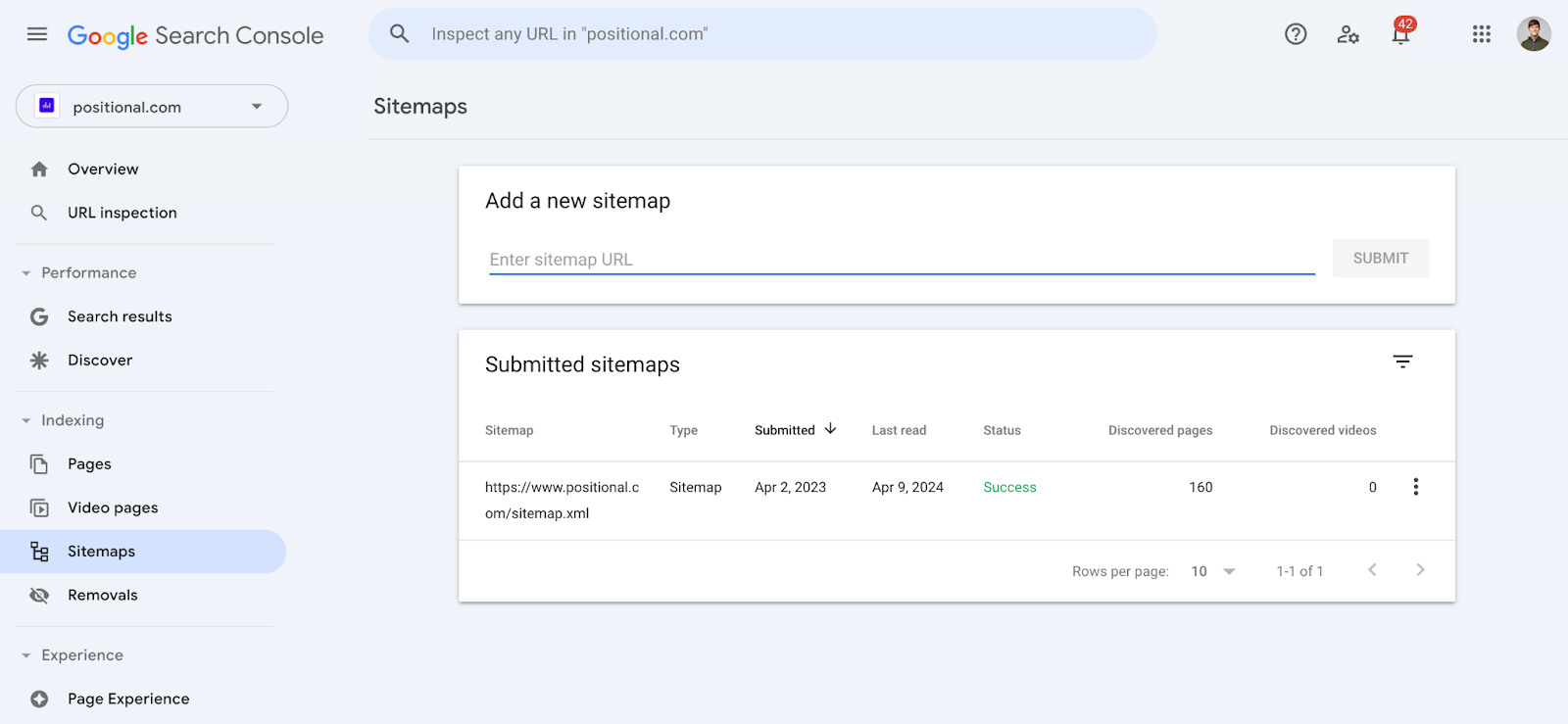

As a best practice, you’ll want to ensure that you’ve submitted your sitemap in GSC and that there aren’t any issues with the sitemap.

In GSC, go to Indexing > Sitemaps, and submit the sitemap URL(s):

After you submit your sitemap, Google will provide a status message — for example, Success, which indicates that they are able to retrieve your sitemap. Alternatively, Google may provide a status message suggesting that there is an issue with your sitemap.

Depending on your content management system, your sitemap may be updated automatically with new webpages as they are created. However, some systems still don’t automatically update your sitemap, so you’ll want to double-check this.
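One way to double-check is to compare the URLs in your live sitemap against a list of pages you know you’ve published. Here’s a minimal sketch, assuming you can export that list from your CMS (the sample data is hypothetical) and that the sitemap is a regular URL set rather than a sitemap index; the function names are our own:

```python
from xml.etree import ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: bytes) -> set[str]:
    """Return every <loc> value in a sitemap URL set."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def missing_from_sitemap(published: list[str], sitemap_xml: bytes) -> list[str]:
    """List published URLs that the sitemap doesn't include yet."""
    listed = sitemap_urls(sitemap_xml)
    return [url for url in published if url not in listed]


if __name__ == "__main__":
    # In practice you'd download your live sitemap; this sample is hypothetical.
    sample = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
</urlset>"""
    published = ["https://www.example.com/", "https://www.example.com/new-post"]
    print(missing_from_sitemap(published, sample))  # ['https://www.example.com/new-post']
```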

Request Indexing

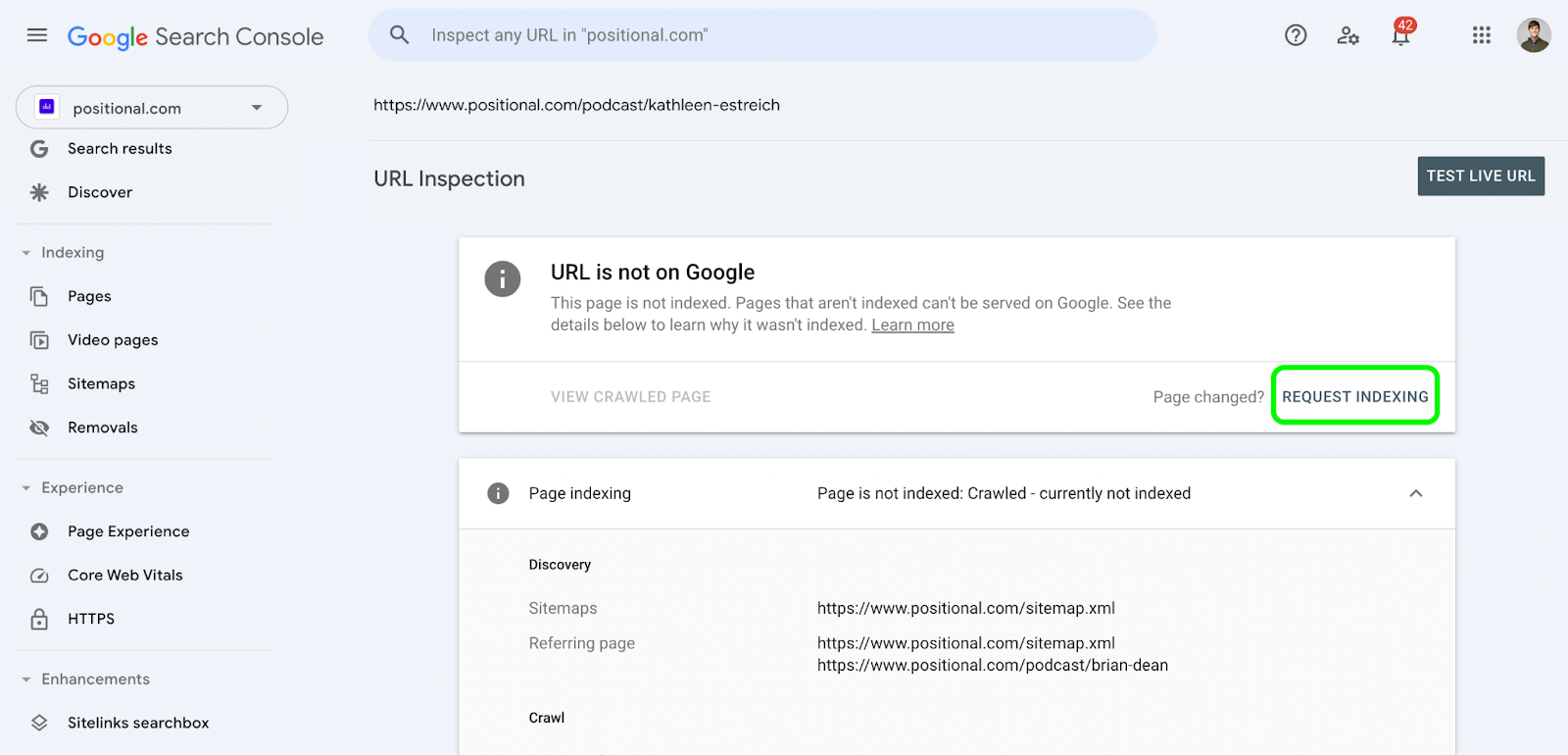

If you see in GSC that a URL isn’t indexed, you can always manually request that it be indexed, by inspecting it:

After you request indexing, Google will add your URL to a priority crawl queue. While this works in most cases, it doesn’t work 100% of the time, and if your page still doesn’t index after a manual request, there may be other issues that you need to address.

Google limits how many URLs you can request indexing for each day. I’m typically able to request indexing on 10 to 15 URLs per day, and it does take some time to go through this process manually.

Use Google’s Indexing API

If you’re creating a large number of pages for job postings or livestreamed events, you can also use Google’s Indexing API to request indexing of those pages at scale.

This is a very effective approach; however, Google only supports the Indexing API for pages with JobPosting or BroadcastEvent (embedded in a VideoObject, i.e., livestream) structured data. That being said, several indexing tools use the Indexing API inappropriately to get other kinds of pages indexed. I would advise using these tools with caution, as it is unclear how Google will handle such requests.
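For legitimate use cases, the request itself is small. This sketch prepares a notification for the Indexing API’s urlNotifications:publish endpoint using Python’s standard library. It assumes an OAuth 2.0 access token from a service account with the https://www.googleapis.com/auth/indexing scope, and the helper names (`build_publish_request`, `publish`) are hypothetical:

```python
import json
import urllib.request

PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"
ALLOWED_TYPES = {"URL_UPDATED", "URL_DELETED"}


def build_publish_request(page_url: str, access_token: str,
                          notification_type: str = "URL_UPDATED") -> urllib.request.Request:
    """Prepare a notification for one job-posting or livestream page."""
    if notification_type not in ALLOWED_TYPES:
        raise ValueError(f"type must be one of {sorted(ALLOWED_TYPES)}")
    body = json.dumps({"url": page_url, "type": notification_type}).encode("utf-8")
    return urllib.request.Request(
        PUBLISH_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",  # assumed service-account token
            "Content-Type": "application/json",
        },
    )


def publish(page_url: str, access_token: str) -> dict:
    """Send the notification (needs network access and a valid token)."""
    with urllib.request.urlopen(build_publish_request(page_url, access_token)) as resp:
        return json.load(resp)
```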

Create Fantastic Content Consistently

Getting into a rhythm is so important. If you create fantastic content consistently — for example, a couple of times per week — Google will start to pay more attention to your website and expect new valuable webpages.

While you don’t need to publish content every day, publishing on a regular cadence signals to Google that it should crawl your website more often.

If Google determines that you are publishing high-quality pages, they will index them and likely rank them faster.

So what is high-quality content?

I tell our customers that high-quality, fantastic content is in-depth, provides unique insights, isn’t duplicative or plagiarized, and is appropriately sourced. As Mike Haney said on the Optimize podcast, “What is your content adding to the internet?” That is the bar we hold our writers and ourselves to at Positional.

Run Editorial Alongside Programmatic

Programmatic SEO is an increasingly popular strategy. It involves publishing large amounts of programmatically generated webpages, typically using a data source. These pages will typically target long-tail keywords and could be part of a product-led SEO strategy.

If you’re pursuing a programmatic SEO strategy, my advice would be to run a traditional or content-led SEO strategy alongside it. This can be helpful for building topical authority and showing Google that the quality of your webpages is high and that they should spend the resources necessary to index your programmatic pages, too.

Finally, if you’re running a programmatic SEO strategy, you’ll want to ramp up gradually. I wouldn’t suggest, for example, publishing 10,000 new URLs to your website overnight. Instead, I would publish in batches, scaling up over time and monitoring indexing along the way.
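To make “publish in batches” concrete, here’s a hypothetical rollout schedule in Python. The starting batch size, growth factor, and cap are illustrative numbers chosen for this example, not figures from Google; the idea is to publish one batch, watch indexing in GSC, and only then release the next:

```python
def rollout_batches(urls: list[str], first_batch: int = 100, growth: float = 2.0,
                    max_batch: int = 2000) -> list[list[str]]:
    """Split URLs into batches that grow over time, up to a maximum size.

    All three defaults are illustrative assumptions, not Google guidance.
    """
    if first_batch < 1 or max_batch < 1:
        raise ValueError("batch sizes must be positive")
    batches: list[list[str]] = []
    start, size = 0, min(first_batch, max_batch)
    while start < len(urls):
        batches.append(urls[start:start + size])
        start += size
        # Grow the next batch, but keep it between 1 and max_batch.
        size = max(1, min(int(size * growth), max_batch))
    return batches


if __name__ == "__main__":
    urls = [f"https://www.example.com/p/{i}" for i in range(10_000)]  # hypothetical pages
    print([len(batch) for batch in rollout_batches(urls)])
    # [100, 200, 400, 800, 1600, 2000, 2000, 2000, 900]
```

With 10,000 hypothetical URLs, the defaults produce batches of 100, 200, 400, 800, and 1,600, then three batches of 2,000 and a final batch of 900.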

Internal Linking Is Mission-Critical

Internal links help Google understand how all of your webpages are interconnected and which pages are important on your website. When linking internally, you want to make sure that you’re prioritizing internal links to the most important pages on your website.

In general, if you see a good opportunity to internally link from a new webpage to another webpage on your website, you should do so if it would be helpful for the user navigating your website.

If you’ve got a number of webpages failing to be indexed naturally, you could try adding internal links pointing to those URLs from other indexed URLs on your website.

You’ll often hear about site structure and SEO. Site structure simply means making it easy for users and Google’s crawlers to access or find the pages on your website.
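Site structure is easy to audit once you have a map of your internal links. The sketch below assumes a hypothetical link graph (for example, exported from a site crawler) and uses a breadth-first search from the homepage to compute each page’s click depth; pages that never show up are unreachable through internal links and are good candidates for new links from indexed pages. The function names are our own:

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage: clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable(links: dict[str, list[str]], home: str, all_pages: list[str]) -> list[str]:
    """Known pages that no chain of internal links leads to from the homepage."""
    reachable = click_depths(links, home)
    return sorted(page for page in all_pages if page not in reachable)


if __name__ == "__main__":
    # Hypothetical internal-link graph: page -> pages it links to.
    links = {
        "/": ["/blog", "/pricing"],
        "/blog": ["/blog/guest-blogging"],
        "/blog/guest-blogging": ["/pricing"],
    }
    pages = ["/", "/blog", "/pricing", "/blog/guest-blogging", "/podcast/episode-1"]
    print(click_depths(links, "/"))
    # {'/': 0, '/blog': 1, '/pricing': 1, '/blog/guest-blogging': 2}
    print(unreachable(links, "/", pages))  # ['/podcast/episode-1']
```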

Avoid Large Amounts of Thin Content

Thin content has many definitions, and there are many different types of thin content.

In short, a webpage with very little substance is thin content. Often, thin content means too few words on the page. However, thin content could also be content that provides little value, is duplicate content, is overly promoted or spammy, is a doorway page, or has a lot of AI-generated content.
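There’s no official word-count threshold, so any automated check is only a rough first pass. As a hypothetical example, the sketch below flags pages under a chosen word count and compares pages using word shingles and Jaccard similarity (a standard near-duplicate heuristic, not anything Google has said it uses). Both thresholds are arbitrary assumptions you’d tune for your own site:

```python
import re


def words(text: str) -> list[str]:
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """All overlapping k-word sequences in the text."""
    tokens = words(text)
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two pages have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def audit(pages: dict[str, str], min_words: int = 300,
          max_similarity: float = 0.8) -> dict[str, list[str]]:
    """Flag short or near-duplicate pages. Both thresholds are arbitrary."""
    flags: dict[str, list[str]] = {url: [] for url in pages}
    for url, text in pages.items():
        if len(words(text)) < min_words:
            flags[url].append("short")
    shingle_sets = {url: shingles(text) for url, text in pages.items()}
    urls = list(pages)
    # Pairwise comparison grows quadratically, so this suits smaller sites.
    for i, a in enumerate(urls):
        for b in urls[i + 1:]:
            if jaccard(shingle_sets[a], shingle_sets[b]) >= max_similarity:
                flags[a].append(f"similar to {b}")
                flags[b].append(f"similar to {a}")
    return {url: reasons for url, reasons in flags.items() if reasons}
```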

Recently, Gary Illyes from Google’s search team commented that Google looks at sitewide signals to determine the quality of a website and its webpages. Some SEOs will argue that Google assigns a quality score to all the pages on your website and that those individual quality scores roll up into a perceived quality score for your entire website.

If you have a large number of thin or unhelpful pages, in theory, you are discouraging Google from crawling and indexing the new pages you create on your website (because they’ll predict that the new pages are likely low-quality, too).

Crawl Budget

You’ll hear a lot about crawl budget. Crawl budget is typically defined as the amount of time and resources Google is willing to spend crawling your website, which in turn limits how quickly new pages can be discovered and indexed.

However, the truth is that for most website owners reading this article, worrying about crawl budget is likely a waste of time. Google has said that crawl budget is mainly a concern for very large or fast-changing websites: for example, large sites (1 million or more unique pages) whose content changes moderately often, such as weekly, or medium or larger sites (10,000 or more unique pages) whose content changes very rapidly, such as daily.

In its crawl budget guide, Google provides some recommendations for website owners. For example, you should avoid unnecessarily long redirect chains, as they consume crawl resources and, as a result, can prevent Google from discovering all of your website’s pages.
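If you have a list of your site’s redirects (from a crawler export or your server configuration, for example), chains are easy to spot. This sketch assumes a simple source-to-target mapping of paths, all of which are hypothetical, and reports any redirect that takes two or more hops to reach its final URL; the `max_hops` cap is just a safety limit chosen for the example:

```python
def redirect_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow a source -> target redirect map from `start` to its final URL."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in seen:
            raise ValueError("redirect loop: " + " -> ".join(chain + [target]))
        chain.append(target)
        seen.add(target)
        if len(chain) - 1 > max_hops:  # safety cap for very long chains
            raise ValueError(f"more than {max_hops} hops starting at {start}")
    return chain


def chains_to_fix(redirects: dict[str, str], min_hops: int = 2) -> dict[str, list[str]]:
    """Redirects that take min_hops or more hops to reach their final URL."""
    found = {}
    for source in redirects:
        chain = redirect_chain(source, redirects)
        if len(chain) - 1 >= min_hops:
            found[source] = chain
    return found


if __name__ == "__main__":
    # Hypothetical redirect map.
    redirects = {"/old": "/older-new", "/older-new": "/new", "/a": "/b"}
    print(chains_to_fix(redirects))  # {'/old': ['/old', '/older-new', '/new']}
```

The usual fix is to point each flagged source (here, /old) directly at its final destination (/new).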

How Often Is Google Crawling My Website?

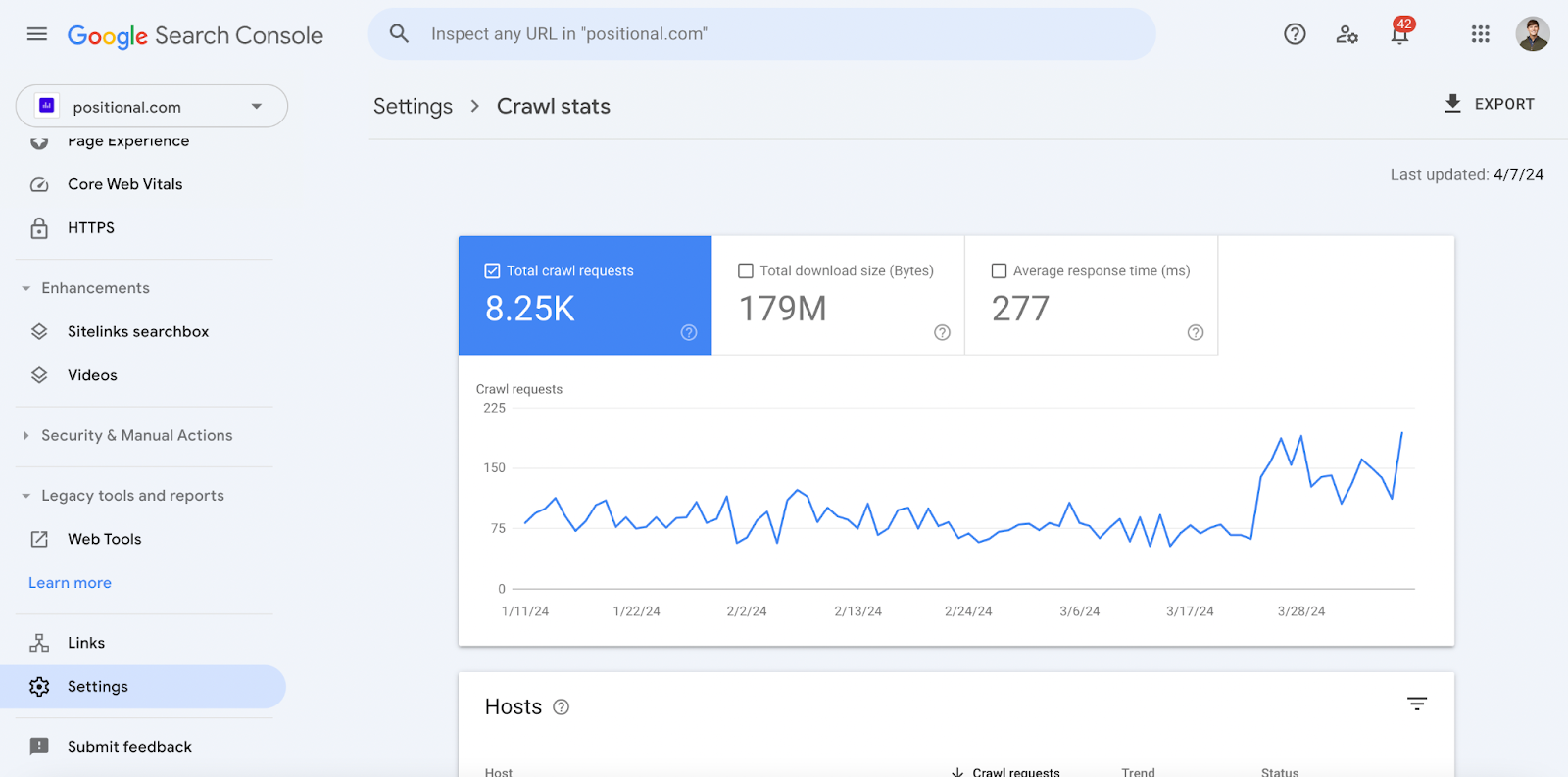

In GSC, under Settings > Crawl stats, Google provides data regarding the crawling of your website:

Google provides data on the number of URLs crawled, the reason for the crawl, potential issues, and a lot more.

You can also view crawling data for specific URLs on your website. If you inspect a URL, Google will provide the date of the last crawl.

You could also manually review the log files of your website to determine when Googlebot last requested specific URLs.
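As a minimal sketch, assuming your server writes logs in the common Combined Log Format, the Python below finds the most recent Googlebot request for each path. Matching on the user-agent string alone is a simplification, since anyone can claim to be Googlebot; Google recommends verifying requests with a reverse DNS lookup before trusting them. The function name and sample lines are hypothetical:

```python
import re
from datetime import datetime

# Combined Log Format: ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def last_googlebot_hits(lines) -> dict[str, datetime]:
    """Map each requested path to the latest request whose user agent claims Googlebot."""
    latest: dict[str, datetime] = {}
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip malformed lines and non-Googlebot traffic
        when = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        path = match["path"]
        if path not in latest or when > latest[path]:
            latest[path] = when
    return latest


if __name__ == "__main__":
    # Hypothetical log lines; in practice, iterate over your access log file.
    sample = [
        '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /blog/post HTTP/1.1" 200 512 "-" '
        '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
        '203.0.113.5 - - [11/Oct/2024:09:00:00 +0000] "GET /blog/post HTTP/1.1" 200 512 "-" '
        '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    ]
    for path, when in last_googlebot_hits(sample).items():
        print(when.isoformat(), path)  # 2024-10-10T13:55:36+00:00 /blog/post
```

To run it against a real log, you could pass an open file object, e.g. `last_googlebot_hits(open("access.log"))`, where the path is whatever your server uses.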

Common Indexing Issues

While we could address many indexing issues, and I’ve already highlighted a few, there are two issues worth calling out specifically. In GSC, at some point, you will likely see that Google has decided not to index a page and is denoting that the page is either Crawled - currently not indexed or Discovered - currently not indexed.

Crawled - currently not indexed

Under Indexing > Pages, the Page indexing report lists the reasons Google has provided for not indexing pages. As of this writing, we’ve got seven pages listed as Crawled - currently not indexed.

This technically means that Google has crawled these URLs but decided not to index them as of the last crawl.

On our site, most of these pages are pages for episodes of our podcast, which are actually quite thin, given that they’re currently missing the episodes’ transcripts. As a result, there is very little context for Google to go on to decide that they’d want to index these URLs. So this makes sense.

Discovered - currently not indexed

Discovered - currently not indexed means that Google knows the URL exists but hasn’t crawled it yet, so it can’t have indexed it.

The first step is to get Google to dedicate its resources to crawling these URLs. In a separate article, I walk you through the process of troubleshooting this issue.

If you’ve got a large number of URLs marked as Discovered - currently not indexed, that likely indicates either a page quality issue or that you’ve recently published a very large number of URLs but Google hasn’t decided to dedicate resources to crawling them yet.

How to Remove Pages from Google’s Index

At times, you might urgently need to remove a URL from Google’s index, and fortunately, there are a couple of different ways to do this.

Request Removal

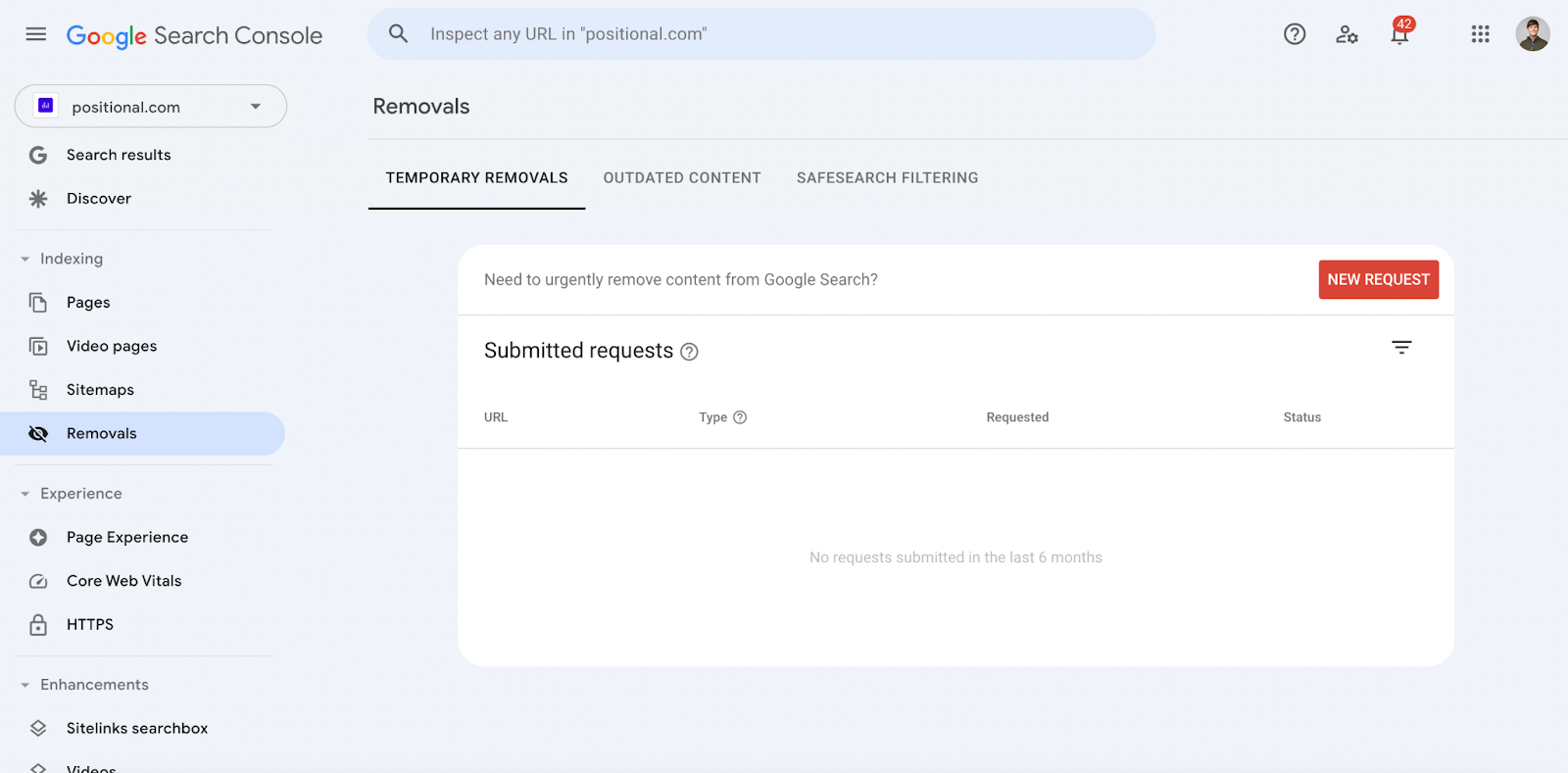

In GSC, you can request that Google remove certain indexed URLs from Google Search. Head to Indexing > Removals, and create a New Request:

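You can select specific URLs or a group of URLs that share a prefix. Keep in mind that these removals are temporary (about six months), so for a permanent removal you’ll also need to delete the page or add a noindex tag. Google provides documentation on how to use this feature in Google Search Central.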

Robots.txt and Noindex

As an alternative approach, you can use noindex rules to tell Google that a specified URL shouldn’t be indexed, and your robots.txt file to control which URLs Google crawls in the first place.

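Google uses robots.txt to determine which pages it should and shouldn’t crawl. You can set up rules within your robots.txt file to tell Google which URLs or paths shouldn’t be crawled. Note, however, that robots.txt controls crawling, not indexing: a URL blocked by robots.txt can still end up indexed (without its content) if other pages link to it.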
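Python’s standard library includes `urllib.robotparser`, which is handy for sanity-checking rules before you deploy them. The robots.txt below is a hypothetical example that also includes a Sitemap line, which lets other search engines find your sitemap. Keep in mind that Python’s parser doesn’t follow Google’s matching rules exactly (for example, it applies the first matching rule rather than the most specific one), so treat it as a quick check rather than a definitive answer:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep crawlers out of internal search results and
# advertise the sitemap's location.
ROBOTS_TXT = """\
User-agent: *
Disallow: /internal-search/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "https://www.example.com/internal-search/?q=seo"))  # False
print(parser.can_fetch("Googlebot", "https://www.example.com/blog/guest-blogging"))  # True
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```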

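You could also place a noindex tag on your pages to instruct Google not to index a particular URL. For the tag to work, Google has to be able to crawl the page, so don’t also block that URL in robots.txt. Google provides a detailed guide on how to implement noindex tags in Google Search Central.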
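A noindex rule can live in a robots meta tag in the page’s HTML (`<meta name="robots" content="noindex">`) or in an X-Robots-Tag HTTP response header. As a simplified sketch, the Python below checks both for a page you’ve already fetched. It ignores user-agent-specific header values such as “googlebot: noindex”, and the class and function names are hypothetical:

```python
from html.parser import HTMLParser
from typing import Optional

NOINDEX_VALUES = {"noindex", "none"}  # "none" is shorthand for noindex, nofollow


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":  # HTMLParser already lowercases tag and attribute names
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in {"robots", "googlebot"}:
            content = attributes.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_noindexed(html: str, headers: Optional[dict[str, str]] = None) -> bool:
    """True if a robots meta tag or the X-Robots-Tag header contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = next(
        (v for k, v in (headers or {}).items() if k.lower() == "x-robots-tag"), ""
    )
    # Simplification: values with a user-agent prefix ("googlebot: noindex") are ignored.
    header_directives = [d.strip().lower() for d in header_value.split(",")]
    return any(d in NOINDEX_VALUES for d in parser.directives + header_directives)


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(is_noindexed(page))  # True
    print(is_noindexed("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))  # True
    print(is_noindexed("<p>Hello</p>"))  # False
```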

Final Thoughts

Without indexing, there’s no SEO. Before a webpage can rank or appear in Google’s search results, that URL has to have been crawled and then indexed by Google.

As we’ve discussed, it can often take time for Google to discover and index a website’s new URLs. To make Google’s life easier, you’ll want to submit your sitemap in Google Search Console and include your sitemap in your robots.txt for other search engines to access.

GSC provides many helpful tools for identifying indexed or non-indexed pages. These tools typically provide reasons for why your URLs may not be indexed.

Indexing cadence should improve over time as your website grows in size and popularity. And there are steps you can take to improve indexing, such as prioritizing internal links from your webpages to other important webpages on your website.

In my experience, most indexing challenges are a result of websites having a large amount of low-quality content. If Google is crawling your pages and deciding not to index them, that’s likely a signal that you need to make changes to the webpages themselves and to think critically about your website’s structure.